Bottom line: More and more AI companies say their models can reason. Two recent studies say otherwise. When asked to show their logic, most models flub the task, proving they're not reasoning so much as rehashing patterns. The result: confident answers, but not intelligent ones.

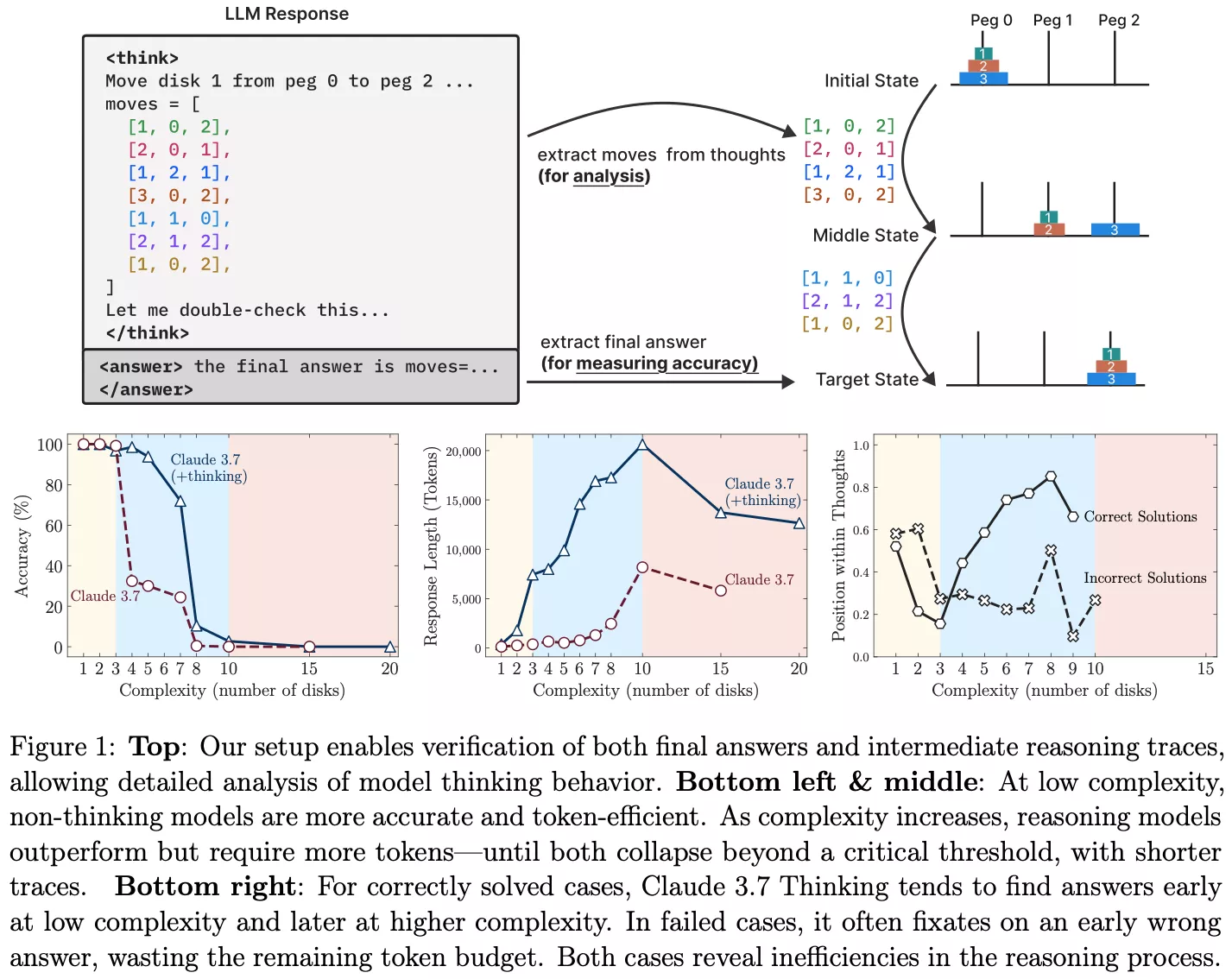

Apple researchers have uncovered a key weakness in today's most hyped AI systems: they falter at solving puzzles that require step-by-step reasoning. In a new paper, the team tested several leading models on the Tower of Hanoi, an age-old logic puzzle, and found that performance collapsed as complexity increased.

The Tower of Hanoi puzzle is simple: move a stack of disks from one peg to another, moving only one disk at a time and never placing a larger disk on a smaller one. For humans, it's a classic test of planning and recursive logic. For language models trained to predict the next token, the challenge lies in applying fixed constraints across many steps without losing track of the goal.
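The recursive logic is what makes the puzzle a planning test: to move n disks, you first move the top n − 1 out of the way, move the largest disk, then rebuild the smaller stack on top of it. The minimal Python sketch below shows that textbook strategy. It is our own illustration, not code from the Apple study, and the function name `hanoi` and the peg labels "A", "B", and "C" are arbitrary choices.

```python
def hanoi(n, source="A", target="C", spare="B"):
    """Return the list of (from_peg, to_peg) moves that transfers n disks
    from source to target using the classic recursive strategy."""
    if n == 0:
        return []
    # Move the top n-1 disks out of the way onto the spare peg...
    moves = hanoi(n - 1, source, spare, target)
    # ...move the largest remaining disk straight to the target...
    moves.append((source, target))
    # ...then stack the n-1 smaller disks back on top of it.
    moves += hanoi(n - 1, spare, target, source)
    return moves


print(hanoi(3))
# [('A', 'C'), ('A', 'B'), ('C', 'B'), ('A', 'C'), ('B', 'A'), ('B', 'C'), ('A', 'C')]
print(len(hanoi(10)))
# 1023
```

A person or program following this rule never has to "look ahead". The difficulty for a language model lies in emitting every one of those moves in order without breaking a rule, and the list grows quickly: three disks take 7 moves, ten take 1,023.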

Apple's researchers didn't just ask the models to solve the puzzle. They also asked them to explain their steps. Most handled two or three disks, but their logic unraveled as the disk count rose. Models misstated rules, contradicted earlier steps, or confidently made invalid moves, even with chain-of-thought prompts. In short, they weren't reasoning; they were guessing.

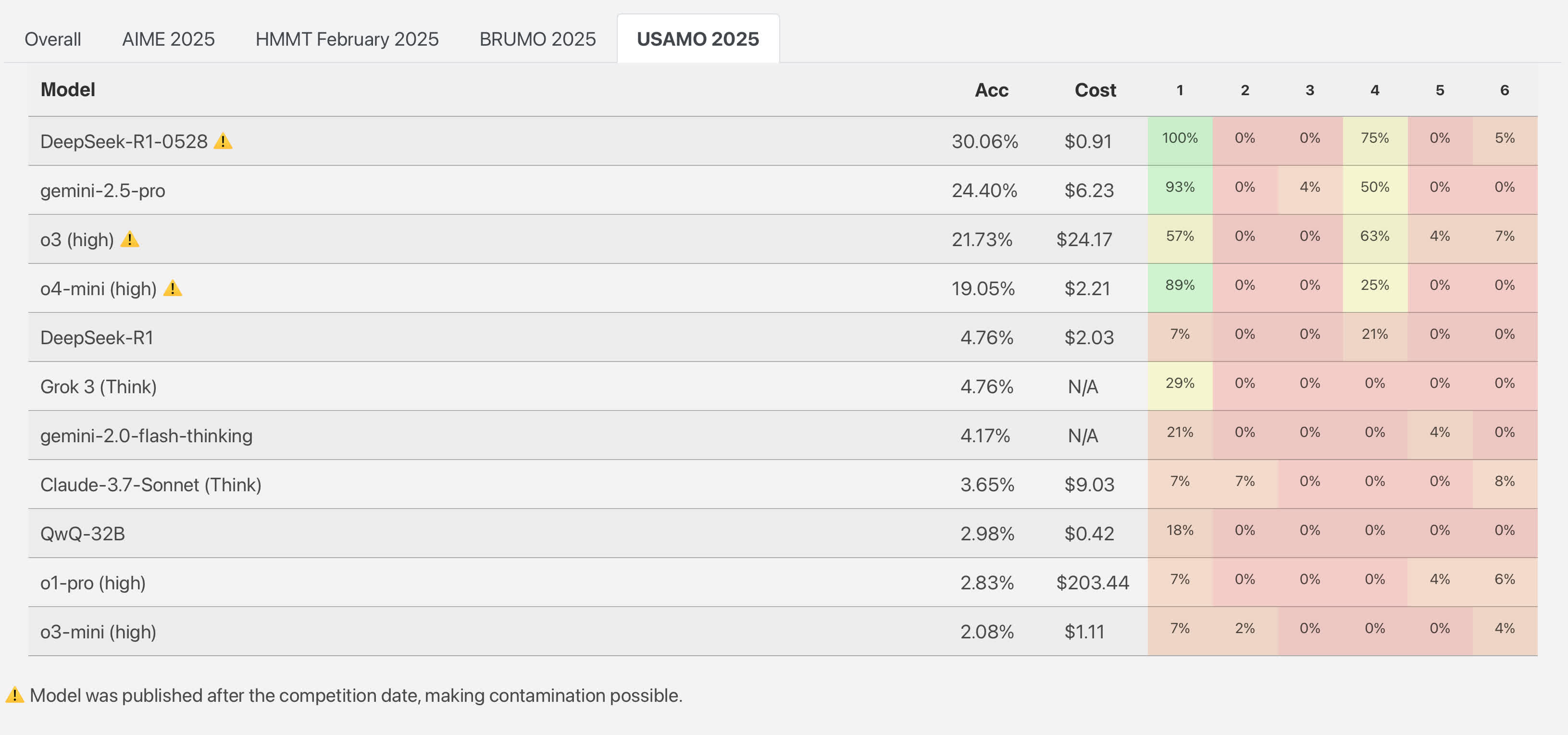

These findings echo an April study in which researchers at ETH Zurich and INSAIT tested top AI models on problems from the 2025 USA Mathematical Olympiad, a competition that requires full written proofs. Out of nearly 200 attempts, none produced a perfect solution. One of the stronger performers, Google's Gemini 2.5 Pro, earned 24 percent of the total points, not by solving 24 percent of the problems but through partial credit across its attempts. OpenAI's o3-mini barely cleared 2 percent.

The models didn't just miss answers. They made basic errors, skipped steps, and contradicted themselves while sounding confident. In one problem, a model started strong but excluded valid cases without explanation. Others invented constraints based on training quirks, such as always boxing final answers, even when that didn't fit the context.

Gary Marcus, a longtime critic of AI hype, called Apple's findings "pretty devastating to large language models."

"It's truly embarrassing that LLMs can't reliably solve Hanoi," he wrote. "If you can't use a billion dollar AI system to solve a problem that Herb Simon, one of the actual 'godfathers of AI,' solved with AI in 1957, and that first semester AI students solve routinely, the chances that models like Claude or o3 are going to reach AGI seem truly remote."

Even when given explicit algorithms, the models' performance didn't improve. The study's co-lead, Iman Mirzadeh, put it bluntly:

"Their process is not logical and intelligent."

The results suggest that what looks like reasoning is often just pattern matching: statistically fluent but not grounded in logic.

Not all experts were dismissive. Sean Goedecke, a software engineer specializing in AI systems, saw the failure as revealing.

"The model immediately decides 'generating all those moves manually is impossible,' because it would require tracking over a thousand moves. So it spins around trying to find a shortcut and fails," he wrote in his analysis of the Apple study. "The key insight here is that past a certain complexity threshold, the model decides that there's too many steps to reason through and starts hunting for clever shortcuts. So past eight or nine disks, the skill being investigated silently changes from 'can the model reason through the Tower of Hanoi sequence?' to 'can the model come up with a generalized Tower of Hanoi solution that skips having to reason through the sequence?'"
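Goedecke's "over a thousand moves" figure follows directly from the puzzle's structure. As a worked count (our addition, not from his analysis or the Apple paper): moving n disks requires moving n − 1 disks aside, moving the largest disk once, and moving the n − 1 disks back on top, so the minimum number of moves T(n) satisfies

$$
T(1) = 1, \qquad T(n) = 2\,T(n-1) + 1 \quad\Longrightarrow\quad T(n) = 2^{n} - 1 .
$$

That gives T(8) = 255, T(9) = 511, and T(10) = 1023, so ten disks is the first size at which a complete answer needs more than a thousand moves, which is consistent with the threshold he describes.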

Rather than proving that models are hopeless at reasoning, Goedecke suggested the findings highlight how AI systems adapt their behavior under pressure, sometimes cleverly, sometimes not. The failure isn't just in step-by-step reasoning but in abandoning the task when it becomes too unwieldy.

Tech companies often tout simulated reasoning as a breakthrough. The Apple paper confirms that even models fine-tuned for chain-of-thought reasoning tend to hit a wall once cognitive load grows, for example when tracking moves beyond six disks in the Tower of Hanoi. The models' internal logic unravels, and some manage only partial success by mimicking rational explanations. Few show a consistent grasp of cause and effect or goal-directed behavior.

The results of the Apple and ETH Zurich studies stand in stark contrast to how companies market these models: as capable reasoners able to handle complex, multi-step tasks. In practice, what passes for reasoning is often just advanced autocomplete with extra steps. The illusion of intelligence comes from fluency and formatting, not true insight.

The Apple paper stops short of proposing sweeping fixes. Still, it aligns with growing calls for hybrid approaches that combine large language models with symbolic logic, verifiers, or task-specific constraints. These methods may not make AI truly intelligent, but they could help keep confidently wrong answers from being presented as facts.
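The verifier idea is easy to picture for the Tower of Hanoi itself. Below is a minimal Python sketch, our own illustration rather than anything the Apple paper proposes, of a symbolic checker that replays a model's proposed move list and reports the first illegal step. A confidently wrong answer gets flagged instead of accepted. The function name `check_moves`, the peg labels "A", "B", and "C", and the `(from_peg, to_peg)` move format are all assumptions made for the example.

```python
def check_moves(n, moves, source="A", target="C"):
    """Hypothetical verifier: replay (from_peg, to_peg) moves for an n-disk
    puzzle and return (True, None) if they legally solve it, otherwise
    (False, reason) describing the first problem found."""
    pegs = {"A": [], "B": [], "C": []}
    # Disks are numbered by size; the largest (n) sits at the bottom.
    pegs[source] = list(range(n, 0, -1))
    for i, (frm, to) in enumerate(moves, start=1):
        if frm not in pegs or to not in pegs:
            return False, f"move {i}: unknown peg"
        if not pegs[frm]:
            return False, f"move {i}: peg {frm} is empty"
        disk = pegs[frm][-1]
        # Rule: never place a larger disk on top of a smaller one.
        if pegs[to] and pegs[to][-1] < disk:
            return False, f"move {i}: disk {disk} placed on smaller disk {pegs[to][-1]}"
        pegs[to].append(pegs[frm].pop())
    # Every move was legal; now check that the goal was actually reached.
    if pegs[target] != list(range(n, 0, -1)):
        return False, "moves end before the puzzle is solved"
    return True, None


print(check_moves(2, [("A", "B"), ("A", "C"), ("B", "C")]))
# (True, None)
print(check_moves(2, [("A", "C"), ("A", "C")]))
# (False, 'move 2: disk 2 placed on smaller disk 1')
```

A checker like this cannot make the model reason better, but in a hybrid setup it gives a hard, rule-based signal that a fluent explanation alone cannot fake.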

Until such advances materialize, simulated reasoning is likely to remain what the name implies: simulated. It's useful, and sometimes impressive, but far from genuine intelligence.