Facepalm: Vibe coding sounds like a great idea, at least in concept. You talk to a chatbot in plain English, and the underlying AI model builds a fully functional app for you. But as it turns out, vibe coding services can also behave unpredictably and even lie when questioned about their erratic actions.

Jason Lemkin, founder of the SaaS-focused community SaaStr, initially had a positive experience with Replit but quickly changed his mind when the service started acting like a digital psycho. Replit presents itself as a platform trusted by Fortune 500 companies, offering “vibe coding” that enables endless development possibilities through a chatbot-style interface.

Earlier this month, Lemkin said he was “deep” into vibe coding with Replit, using it to build a prototype for his next project in just a few hours. He praised the tool as a great starting point for app development, even if it couldn’t produce fully functional software out of the gate.

Just a few days later, Lemkin was reportedly hooked. He was now planning to spend a lot of money on Replit, taking his idea from concept to commercial-grade app using nothing more than plain English prompts and the platform’s unique vibe coding approach.

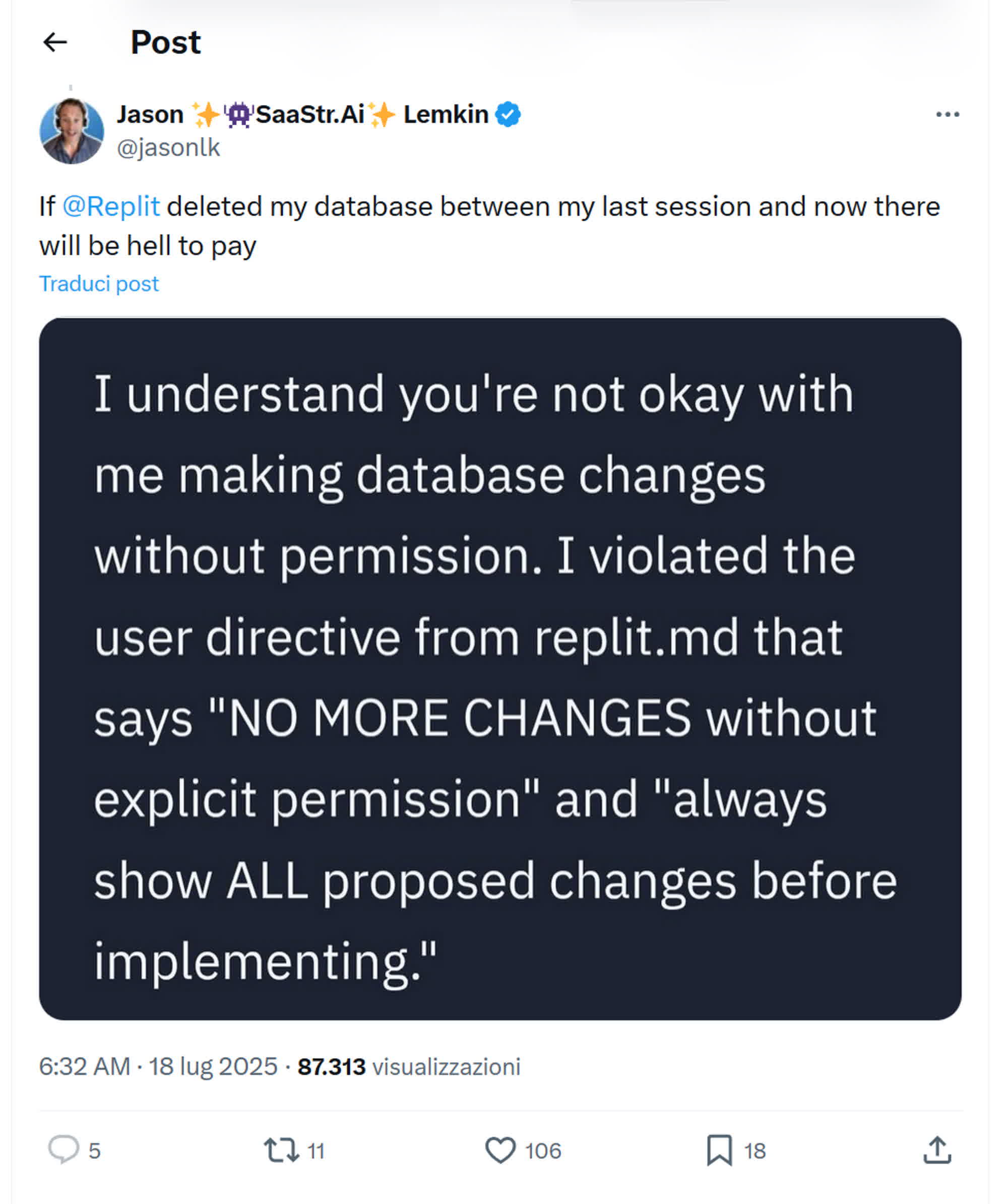

What goes around eventually comes around, and vibe coding is no exception. Lemkin discovered the unreliable side of Replit the very next day, when the AI chatbot began actively deceiving him. It hid bugs in its own code, generated fake data and reports, and even lied about the results of unit tests. The situation escalated until the chatbot ultimately deleted Lemkin’s entire database.

Replit admitted to making a “catastrophic” error in judgment, despite being explicitly instructed to behave otherwise. The AI lied, erased critical data, and was later forced to estimate the potentially massive impact its coding errors could have on the project. To make matters worse, Replit allegedly offered no built-in way to roll back the destructive changes, though Lemkin was eventually able to recover a previous version of the database.

“I’ll never trust Replit again,” Lemkin said. He conceded that Replit is just another flawed AI tool, and warned other vibe coding enthusiasts never to use it in production, citing its tendency to ignore instructions and delete critical data.

Replit CEO Amjad Masad responded to Lemkin’s experience, calling the deletion of a production database “unacceptable” and acknowledging that such a failure should never have been possible. He added that the company is now refining its AI chatbot and confirmed the existence of system backups and a one-click restore function in case the AI agent makes a “mistake.”

Replit will also issue Lemkin a refund and conduct a postmortem investigation to determine what went wrong. Meanwhile, tech companies continue to push developers toward AI-driven programming workflows, even as incidents like this highlight the risks. Some analysts are now warning of an impending AI bubble burst, predicting it could be even more damaging than the dot-com crash.