The latest release of the GPT-5 model offers developers cutting-edge AI capabilities with advances in coding, reasoning, and creativity. GPT-5 also introduces new API options that give you detailed control over its outputs. This primer introduces GPT-5 in the context of the API, summarizes what has changed, and explains how you can apply it to code and automated tasks.

GPT-5 is built for developers. It adds parameters and tools that let you control verbosity, depth of reasoning, and output format. In this guide, you'll learn how to start using GPT-5, understand some of its unique parameters, and review code samples from OpenAI's Cookbook that illustrate what it offers beyond prior model versions.

What’s New in GPT-5?

GPT-5 is smarter, more controllable, and better suited to complex work. It is very good at code generation, reasoning, and using tools. The model shows state-of-the-art performance on engineering benchmarks, writes beautiful frontend UIs, follows instructions well, and can act autonomously when completing multi-step tasks. It is designed to feel like you are interacting with a true collaborator. Its main features include:

Breakthrough Capabilities

- State-of-the-art performance on SWE-bench (74.9%) and Aider (88%)

- Generates complex, responsive UI code while showing design sense

- Can fix hard bugs and understand large codebases

- Plans tasks like a real AI agent: it uses APIs precisely and recovers properly from tool failures

Smarter reasoning and fewer hallucinations

- Fewer factual inaccuracies and hallucinations

- Better understanding and execution of user instructions

Agentic behavior and tool integration

- Can undertake multi-step, multi-tool workflows

Why Use GPT-5 via API?

GPT-5 is purpose-built for developers and achieves expert-level performance on real-world coding and data tasks. Its API unlocks automation, precision, and control. Whether you are debugging or building full applications, GPT-5 is easy to integrate with your workflows, helping you scale productivity and reliability with little overhead.

- Developer-specific: Built for coding workflows, so it is easy to integrate into development tools and IDEs.

- Proven performance: State-of-the-art results on real-world tasks (e.g. bug fixes, code edits), with fewer errors and fewer tokens needed.

- Fine-grained control: New parameters such as verbosity and reasoning, plus custom tool calls, let you shape the output and build automated pipelines.

Getting Started

To begin using GPT-5 in your applications, you need to configure access to the API, understand the available endpoints, and select the right model variant for your needs. This section walks you through configuring your API credentials, choosing between the Chat Completions and Responses endpoints, and navigating the GPT-5 models so you can use them to their full potential.

- Accessing GPT-5 API

First, set up your API credentials, for example by exposing the key as the OPENAI_API_KEY environment variable. Then install or upgrade the OpenAI SDK to a version that supports GPT-5. From there, you can call the GPT-5 models (gpt-5, gpt-5-mini, gpt-5-nano) like any other model through the API. Create a .env file and save the API key as:

OPENAI_API_KEY=sk-abc1234567890

- API Keys and Authentication

To make any GPT-5 API call, you need a valid OpenAI API key. Either set the environment variable OPENAI_API_KEY or pass the key directly to the client. Keep your key secure, since it authenticates your requests.

import os
from openai import OpenAI

# Read the key from the environment instead of hard-coding it
client = OpenAI(
    api_key=os.environ.get("OPENAI_API_KEY")
)

- Choosing the Right Endpoint

GPT-5 is available through the Responses API, a unified endpoint for interacting with the model that exposes reasoning traces, tool calls, and advanced controls through the same interface, which makes it the best choice overall. OpenAI recommends this API for all new deployments.

from openai import OpenAI

# The client reads OPENAI_API_KEY from the environment
client = OpenAI()

response = client.responses.create(
    model="gpt-5",
    input=[{"role": "user", "content": "Tell me a one-sentence bedtime story about a unicorn."}]
)

print(response.output_text)

Model Variants

| Model Variant | Best Use Case | Key Advantage |
|---|---|---|
| gpt-5 | Complex, multi-step reasoning and coding tasks | High performance |
| gpt-5-mini | Balanced tasks needing both speed and value | Lower cost with decent speed |
| gpt-5-nano | Real-time or resource-constrained environments | Ultra-low latency, minimal cost |

Using GPT-5 Programmatically

To access GPT-5, we can use the OpenAI SDK. For example, in Python:

from openai import OpenAI

client = OpenAI()

Then use client.responses.create to submit requests with your messages and parameters for GPT-5. The SDK automatically uses your API key to authenticate the request.

API Request Structure

A typical GPT-5 API request includes the following fields:

- model: The GPT-5 variant (gpt-5, gpt-5-mini, or gpt-5-nano).

- input/messages:

  - For the Responses API: use an input field with a list of messages (each having a role and content)

  - For the Chat Completions API: use the messages field with the same structure

- text: An optional parameter containing a dictionary of output-styling parameters, such as:

  - verbosity: "low", "medium", or "high" to control the level of detail

- reasoning: An optional parameter containing a dictionary that controls how much reasoning effort the model applies, such as:

  - effort: "minimal" for quicker, lightweight tasks

- tools: An optional parameter containing a list of custom tool definitions, such as for function calls or grammar constraints.
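The field list above can be sketched as a small helper that assembles the keyword arguments for a Responses API call and rejects values the article does not list. This is only an illustration: `build_request` and the `ALLOWED_*` sets are hypothetical names, not part of the OpenAI SDK, and the accepted values are taken from this article rather than from the API itself.

```python
from typing import Any, Dict, List, Optional

# Values taken from this article's parameter descriptions (assumption:
# the API may accept more, or different, values than listed here).
ALLOWED_MODELS = {"gpt-5", "gpt-5-mini", "gpt-5-nano"}
ALLOWED_VERBOSITY = {"low", "medium", "high"}
ALLOWED_EFFORT = {"minimal", "low", "medium", "high"}


def build_request(
    model: str,
    messages: List[Dict[str, str]],
    verbosity: Optional[str] = None,
    effort: Optional[str] = None,
    tools: Optional[List[Dict[str, Any]]] = None,
) -> Dict[str, Any]:
    """Hypothetical helper: assemble kwargs for client.responses.create."""
    if model not in ALLOWED_MODELS:
        raise ValueError(f"unknown model: {model!r}")
    if verbosity is not None and verbosity not in ALLOWED_VERBOSITY:
        raise ValueError(f"unknown verbosity: {verbosity!r}")
    if effort is not None and effort not in ALLOWED_EFFORT:
        raise ValueError(f"unknown reasoning effort: {effort!r}")

    # Required fields first, optional fields only when requested.
    request: Dict[str, Any] = {"model": model, "input": messages}
    if verbosity is not None:
        request["text"] = {"verbosity": verbosity}
    if effort is not None:
        request["reasoning"] = {"effort": effort}
    if tools:
        request["tools"] = tools
    return request


if __name__ == "__main__":
    kwargs = build_request(
        "gpt-5-mini",
        [{"role": "user", "content": "Hello"}],
        verbosity="low",
        effort="minimal",
    )
    print(kwargs)
```

The resulting dictionary could then be passed along as `client.responses.create(**kwargs)`; validating locally simply surfaces typos before a request is sent.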

- Key Parameters: verbosity, reasoning_effort, max_tokens

When interacting with GPT-5, several parameters let you customize how the model responds. Knowing them gives you more control over the quality, performance, and cost of the responses you receive.

- verbosity

Controls the level of detail in the model's response.

Accepted values: "low", "medium", or "high"

  - "low" gives short, to-the-point answers

  - "high" gives thorough, detailed explanations and answers

- reasoning_effort

Controls how much internal reasoning the model does before responding. In the Responses API it is set through the reasoning field, as in reasoning={"effort": "minimal"}.

Accepted values: "minimal", "low", "medium", "high"

  - "minimal" usually returns the fastest answer with little to no explanation

  - "high" gives the model more room for deeper analysis and therefore, potentially, more developed outputs than the lower settings

- max_tokens

Sets an upper limit on the number of tokens in the model's response. It is useful for controlling cost or capping the length of the answer. In the Responses API, this limit is passed as max_output_tokens.

Pattern API Name

Here’s a Python instance utilizing the OpenAI library to name GPT-5. It takes a consumer immediate and sends it, then prints the response of the mannequin:

from openai import OpenAI

consumer = OpenAI()

response = consumer.responses.create(

mannequin="gpt-5",

enter=[{"role": "user", "content": "Hello GPT-5, what can you do?"}],

textual content={"verbosity": "medium"},

reasoning={"effort": "minimal"}

)

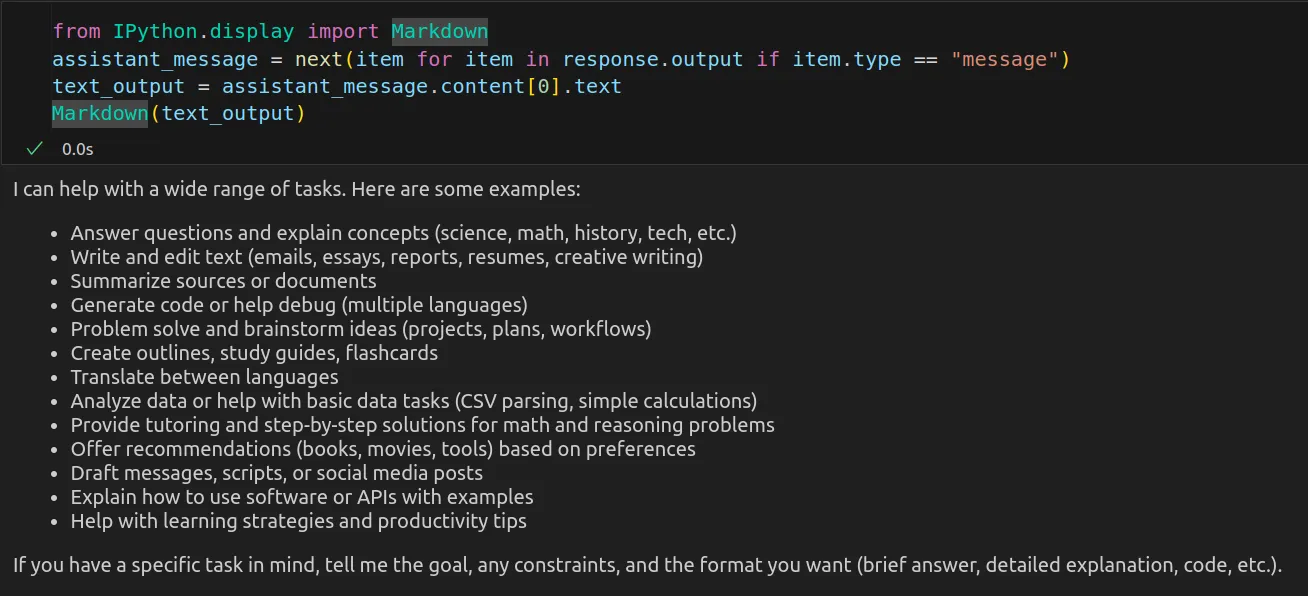

print(response.output)Output:

Advanced Capabilities

In the following sections, we'll test four new capabilities of the GPT-5 API.

Verbosity Control

The verbosity parameter lets you signal whether GPT-5 should be succinct or verbose. You can set verbosity to "low", "medium", or "high". The higher the verbosity, the longer and more detailed the model's output. Conversely, low verbosity keeps the model focused on shorter answers.

Example: Coding Use Case: Fibonacci Sequence

from openai import OpenAI

client = OpenAI(api_key="sk-proj---")

prompt = "Output a Python program for fibonacci series"

def ask_with_verbosity(verbosity: str, question: str):
    response = client.responses.create(
        model="gpt-5-mini",
        input=question,
        text={
            "verbosity": verbosity
        }
    )

    # Extract assistant's text output
    output_text = ""
    for item in response.output:
        # Some output items carry no content, so fall back to an empty list
        for content in getattr(item, "content", None) or []:
            if hasattr(content, "text"):
                output_text += content.text

    # Token usage details
    usage = response.usage

    print("--------------------------------")
    print(f"Verbosity: {verbosity}")
    print("Output:")
    print(output_text)
    print("Tokens => input: {} | output: {}".format(
        usage.input_tokens, usage.output_tokens
    ))

This use case covers code generation at varying levels of verbosity.

- Low: Generates minimal code with little or no explanation.

ask_with_verbosity("low", prompt)

Response:

Verbosity: low
Output:

```python
def fibonacci(n):
    a, b = 0, 1
    for _ in range(n):
        yield a
        a, b = b, a + b

def main():
    try:
        n = int(input("Enter how many Fibonacci numbers to print: "))
        if n <= 0:
            print("Please enter a positive integer.")
            return
    except ValueError:
        print("Invalid input.")
        return
    print(*fibonacci(n))

if __name__ == "__main__":
    main()
```

Tokens => input: 13 | output: 375

- Medium: Generates code with inline comments and some structural elements.

ask_with_verbosity("medium", prompt)

Response:

Verbosity: medium
Output:

Here's a simple Python program that prints the first N Fibonacci numbers (starting with 0, 1). It validates input and uses an efficient iterative approach:

```python
def fib_series(n):
    """Return a list with the first n Fibonacci numbers (0, 1, 1, 2, ...)."""
    if n <= 0:
        return []
    if n == 1:
        return [0]
    series = [0, 1]
    for _ in range(2, n):
        series.append(series[-1] + series[-2])
    return series

def main():
    try:
        n = int(input("Enter number of Fibonacci terms to generate: ").strip())
    except ValueError:
        print("Please enter a valid integer.")
        return
    if n <= 0:
        print("Enter a positive integer.")
        return
    series = fib_series(n)
    print("Fibonacci series (first {} terms):".format(n))
    print(", ".join(map(str, series)))

if __name__ == "__main__":
    main()
```

If you want other variants (e.g., recursive version, generator that yields values indefinitely, or printing up to a maximum value instead of a count), tell me which and I can provide it.

Tokens => input: 13 | output: 590

- High: Generates full production scripts with arguments, validation, and detailed comments.

ask_with_verbosity("high", prompt)

Response:

Verbosity: high
Output:

Below are several Python programs/functions for producing Fibonacci numbers and the Fibonacci series. I include a short explanation for each, examples of how to use them, and notes about performance so you can choose the one that best fits your needs.

Note: the usual convention shown here is F0 = 0, F1 = 1, Fn = Fn-1 + Fn-2.

1) Simple iterative version: recommended for producing a sequence (O(n) time, O(1) extra space)

```python
def fibonacci_list(n):
    """Return a list with the first n Fibonacci numbers: F0 .. F(n-1).

    n must be a non-negative integer."""
    if n < 0:
        raise ValueError("n must be non-negative")
    if n == 0:
        return []
    if n == 1:
        return [0]
    seq = [0, 1]
    for _ in range(2, n):
        seq.append(seq[-1] + seq[-2])
    return seq

# Example usage:
if __name__ == "__main__":
    k = int(input("How many Fibonacci numbers? "))
    print(fibonacci_list(k))
```

2) Generator version: iterate lazily over the sequence

```python
def fib_generator():
    """Infinite Fibonacci generator: yields 0, 1, 1, 2, 3, ..."""
    a, b = 0, 1
    while True:
        yield a
        a, b = b, a + b

# Example: print first 10 Fibonacci numbers
if __name__ == "__main__":
    import itertools
    for x in itertools.islice(fib_generator(), 10):
        print(x, end=" ")
    print()
```

3) Recursive with memoization (fast and simple)

```python
from functools import lru_cache

@lru_cache(maxsize=None)
def fib_memo(n):
    if n < 0:
        raise ValueError("n must be non-negative")
    if n < 2:
        return n
    return fib_memo(n-1) + fib_memo(n-2)

# Example:
if __name__ == "__main__":
    print(fib_memo(100))  # works quickly thanks to memoization
```

Which one should you use?

- For typical use (print the first N Fibonacci numbers or compute F_n for moderate n), use the simple iterative fibonacci_list or fibonacci_n.

- For very large n (e.g., thousands or millions of digits), use the fast doubling method (fib_fast_doubling); it computes F_n in O(log n) arithmetic operations using Python's big integers.

- Avoid the naive recursion except for teaching/demonstration.

- Use memoized recursion for convenience if you want a recursive version but still need speed.

If you tell me which variant you want (print series vs return nth value, starting indices, how you want input, or limits like very large n), I can provide a single small script tailored to that use case.

Tokens => input: 13 | output: 1708

Free-Form Function Calling

GPT-5 can now send raw text payloads, anything from Python scripts to SQL queries, to your custom tool without wrapping the data in JSON, using the new tool "type": "custom". This differs from classic structured function calls and gives you greater flexibility when interacting with external runtimes such as:

- code_exec with sandboxes (Python, C++, Java, …)

- SQL databases

- Shell environments

- Configuration generators

Note that the custom tool type does NOT support parallel tool calling.
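Because a custom tool receives plain text rather than JSON arguments, the receiving side only needs to route a tool name and a raw string to the right runtime. The sketch below shows one possible shape for that routing. Everything in it is hypothetical: the `HANDLERS` registry, the `run_sql` tool name, and the stand-in handlers (which only describe the payload instead of executing it) are assumptions made for illustration.

```python
from typing import Callable, Dict

# A handler takes the raw text payload and returns a text result.
Handler = Callable[[str], str]


def describe_python(code: str) -> str:
    """Stand-in handler: reports the payload size instead of executing it."""
    return f"received {len(code.splitlines())} line(s) of Python"


def describe_sql(query: str) -> str:
    """Stand-in handler: echoes the query instead of running it."""
    return f"received SQL: {query.strip()}"


# Hypothetical registry mapping custom tool names to local handlers.
HANDLERS: Dict[str, Handler] = {
    "code_exec_python": describe_python,
    "run_sql": describe_sql,
}


def dispatch(tool_name: str, raw_input: str) -> str:
    """Route a raw text payload to its handler; no JSON parsing is involved."""
    handler = HANDLERS.get(tool_name)
    if handler is None:
        return f"error: no handler registered for {tool_name}"
    return handler(raw_input)


if __name__ == "__main__":
    print(dispatch("code_exec_python", "import time\nprint(time.time())"))
    print(dispatch("run_sql", "SELECT 1;"))
```

Returning an error string for unknown tools, rather than raising, keeps the conversation loop alive so the model can be told what went wrong.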

To illustrate free-form tool calling, we'll ask GPT-5 to:

- Generate Python, C++, and Java code that multiplies two 5×5 matrices.

- Print only the time (in ms) taken for each iteration in the code.

- Call all three functions, and then stop.

from openai import OpenAI
from typing import List, Optional

MODEL_NAME = "gpt-5-mini"

# Tools that will be passed to every model invocation
TOOLS = [
    {
        "type": "custom",
        "name": "code_exec_python",
        "description": "Executes python code",
    },
    {
        "type": "custom",
        "name": "code_exec_cpp",
        "description": "Executes c++ code",
    },
    {
        "type": "custom",
        "name": "code_exec_java",
        "description": "Executes java code",
    },
]

client = OpenAI(api_key="ADD-YOUR-API-KEY")

def create_response(
    input_messages: List[dict],
    previous_response_id: Optional[str] = None,
):
    """Wrapper around client.responses.create."""
    kwargs = {
        "model": MODEL_NAME,
        "input": input_messages,
        "text": {"format": {"type": "text"}},
        "tools": TOOLS,
    }
    if previous_response_id:
        kwargs["previous_response_id"] = previous_response_id
    return client.responses.create(**kwargs)

def run_conversation(
    input_messages: List[dict],
    previous_response_id: Optional[str] = None,
):
    """Recursive function to handle tool calls and continue conversation."""
    response = create_response(input_messages, previous_response_id)

    # Check for tool calls in the response
    tool_calls = [output for output in response.output if output.type == "custom_tool_call"]

    if tool_calls:
        # Handle all tool calls in this response
        for tool_call in tool_calls:
            print("--- tool name ---")
            print(tool_call.name)
            print("--- tool call argument (generated code) ---")
            print(tool_call.input)
            print()  # Add spacing

            # Add synthetic tool result to continue the conversation
            input_messages.append({
                "type": "function_call_output",
                "call_id": tool_call.call_id,
                "output": "done",
            })

        # Continue the conversation recursively
        return run_conversation(input_messages, previous_response_id=response.id)
    else:
        # No more tool calls - check for final response
        if response.output and len(response.output) > 0:
            message_content = response.output[0].content
            if message_content:
                print("--- final model response ---")
                print(message_content)
            else:
                print("--- conversation completed (no final message) ---")
        return response

# Your prompt
prompt = """
Write code to multiply two 5x5 matrices in three languages: C++, Python, and Java using code_exec functions. Each language should multiply the matrices 10 times and measure the time taken for each iteration.

ALWAYS CALL THESE THREE FUNCTIONS EXACTLY ONCE: code_exec_python, code_exec_cpp, and code_exec_java tools to perform the matrix multiplication in each language once.

Print only the time it takes to multiply the matrices (in milliseconds) per iteration.
"""

# Initial developer message
messages = [
    {
        "role": "developer",
        "content": prompt,
    }
]

# Start the conversation
run_conversation(messages)

Response in all 3 Languages:

--- tool name ---
code_exec_python
--- tool call argument (generated code) ---
import time

N = 5
A = [[i + j for j in range(N)] for i in range(N)]
B = [[i * j for j in range(N)] for i in range(N)]

def matmul(A, B):
    N = len(A)
    C = [[0] * N for _ in range(N)]
    for i in range(N):
        for j in range(N):
            s = 0
            for k in range(N):
                s += A[i][k] * B[k][j]
            C[i][j] = s
    return C

for _ in range(10):
    t1 = time.perf_counter()
    C = matmul(A, B)
    t2 = time.perf_counter()
    ms = (t2 - t1) * 1000.0
    # Print only the time in milliseconds per iteration
    print(ms)

--- tool name ---
code_exec_cpp
--- tool call argument (generated code) ---
#include <chrono>
#include <iostream>
using namespace std;

int main() {
    const int N = 5;
    double A[N][N], B[N][N], C[N][N];
    for (int i = 0; i < N; ++i)
        for (int j = 0; j < N; ++j) {
            A[i][j] = i + j;
            B[i][j] = i * j;
        }

    for (int it = 0; it < 10; ++it) {
        for (int i = 0; i < N; ++i)
            for (int j = 0; j < N; ++j)
                C[i][j] = 0.0;

        auto t1 = chrono::high_resolution_clock::now();
        for (int i = 0; i < N; ++i) {
            for (int j = 0; j < N; ++j) {
                double s = 0.0;
                for (int k = 0; k < N; ++k)
                    s += A[i][k] * B[k][j];
                C[i][j] = s;
            }
        }
        auto t2 = chrono::high_resolution_clock::now();
        double ms = chrono::duration<double, milli>(t2 - t1).count();
        cout << ms << endl;
    }
    return 0;
}

--- tool name ---
code_exec_java
--- tool call argument (generated code) ---
public class Main {
    public static void main(String[] args) {
        int N = 5;
        double[][] A = new double[N][N];
        double[][] B = new double[N][N];
        double[][] C = new double[N][N];
        for (int i = 0; i < N; i++)
            for (int j = 0; j < N; j++) {
                A[i][j] = i + j;
                B[i][j] = i * j;
            }

        for (int it = 0; it < 10; it++) {
            for (int i = 0; i < N; i++)
                for (int j = 0; j < N; j++)
                    C[i][j] = 0.0;

            long t1 = System.nanoTime();
            for (int i = 0; i < N; i++) {
                for (int j = 0; j < N; j++) {
                    double s = 0.0;
                    for (int k = 0; k < N; k++)
                        s += A[i][k] * B[k][j];
                    C[i][j] = s;
                }
            }
            long t2 = System.nanoTime();
            double ms = (t2 - t1) / 1_000_000.0;
            System.out.println(ms);
        }
    }
}
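The conversation loop in this section feeds a synthetic result back to the model instead of running the generated code. As a rough sketch of what a real Python executor might look like, the hypothetical `run_python_payload` helper below writes the payload to a temporary file and runs it in a separate interpreter with a timeout. Note the assumption made loudly here: a child process is not a sandbox, so model-generated code should only be run this way inside an isolated environment.

```python
import subprocess
import sys
import tempfile
from pathlib import Path


def run_python_payload(code: str, timeout_s: float = 10.0) -> str:
    """Run a raw Python payload in a child interpreter and return its output.

    WARNING: this isolates nothing. It is a sketch of the plumbing only,
    not a safe way to execute untrusted, model-generated code.
    """
    with tempfile.TemporaryDirectory() as tmp:
        script = Path(tmp) / "payload.py"
        script.write_text(code, encoding="utf-8")
        try:
            completed = subprocess.run(
                [sys.executable, str(script)],
                capture_output=True,
                text=True,
                timeout=timeout_s,
                cwd=tmp,
            )
        except subprocess.TimeoutExpired:
            return f"error: timed out after {timeout_s} s"

    # On failure, include stderr so the model can see the traceback.
    output = completed.stdout
    if completed.returncode != 0:
        output += completed.stderr
    return output.strip()


if __name__ == "__main__":
    print(run_python_payload("print(sum(range(5)))"))
```

Under these assumptions, the tool result sent back for code_exec_python could carry the string returned by `run_python_payload(tool_call.input)` in place of the fixed placeholder, so the model sees the actual timings.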

Context-Free Grammar (CFG) Enforcement

GPT-5's Context-Free Grammar (CFG) enforcement feature lets developers constrain outputs to a rigid structure, which is ideal for very precise formats such as SQL or even regex. One example is keeping separate grammars for MS SQL (TOP) and PostgreSQL (LIMIT) to ensure that GPT-5 generates a syntactically valid query for either database.

MS SQL Grammar

The mssql_grammar specifies the exact structure of a valid SQL Server query: SELECT TOP, filtering, ordering, and syntax. It constrains the model to:

- Returning a fixed number of rows (TOP N)

- Filtering on total_amount and order_date

- Using proper syntax such as ORDER BY … DESC and a terminating semicolon

- Using only safe, read-only queries with a fixed set of columns, keywords, and value formats

PostgreSQL Grammar

The postgres_grammar is analogous to mssql_grammar but matches PostgreSQL's syntax by using LIMIT instead of TOP. It constrains the model to:

- Using LIMIT N to limit the result size

- Using the same filtering and ordering rules

- Validating identifiers, numbers, and date formats

- Restricting unsafe or unsupported SQL operations by limiting the SQL structure

import textwrap

# ----------------- grammars for MS SQL dialect -----------------
mssql_grammar = textwrap.dedent(r"""
    // ---------- Punctuation & operators ----------
    SP: " "
    COMMA: ","
    GT: ">"
    EQ: "="
    SEMI: ";"

    // ---------- Start ----------
    start: "SELECT" SP "TOP" SP NUMBER SP select_list SP "FROM" SP table SP "WHERE" SP amount_filter SP "AND" SP date_filter SP "ORDER" SP "BY" SP sort_cols SEMI

    // ---------- Projections ----------
    select_list: column (COMMA SP column)*
    column: IDENTIFIER

    // ---------- Tables ----------
    table: IDENTIFIER

    // ---------- Filters ----------
    amount_filter: "total_amount" SP GT SP NUMBER
    date_filter: "order_date" SP GT SP DATE

    // ---------- Sorting ----------
    sort_cols: "order_date" SP "DESC"

    // ---------- Terminals ----------
    IDENTIFIER: /[A-Za-z_][A-Za-z0-9_]*/
    NUMBER: /[0-9]+/
    DATE: /'[0-9]{4}-[0-9]{2}-[0-9]{2}'/
""")

# ----------------- grammars for PostgreSQL dialect -----------------
postgres_grammar = textwrap.dedent(r"""
    // ---------- Punctuation & operators ----------
    SP: " "
    COMMA: ","
    GT: ">"
    EQ: "="
    SEMI: ";"

    // ---------- Start ----------
    start: "SELECT" SP select_list SP "FROM" SP table SP "WHERE" SP amount_filter SP "AND" SP date_filter SP "ORDER" SP "BY" SP sort_cols SP "LIMIT" SP NUMBER SEMI

    // ---------- Projections ----------
    select_list: column (COMMA SP column)*
    column: IDENTIFIER

    // ---------- Tables ----------
    table: IDENTIFIER

    // ---------- Filters ----------
    amount_filter: "total_amount" SP GT SP NUMBER
    date_filter: "order_date" SP GT SP DATE

    // ---------- Sorting ----------
    sort_cols: "order_date" SP "DESC"

    // ---------- Terminals ----------
    IDENTIFIER: /[A-Za-z_][A-Za-z0-9_]*/
    NUMBER: /[0-9]+/
    DATE: /'[0-9]{4}-[0-9]{2}-[0-9]{2}'/
""")

The following example uses GPT-5 and a custom mssql_grammar tool to produce a SQL Server query that returns recent high-value orders by customer. The grammar rules in mssql_grammar enforce SQL Server syntax, so the model produces the correct SELECT TOP form for returning limited results.

from openai import OpenAI

client = OpenAI()

sql_prompt_mssql = (
    "Call the mssql_grammar to generate a query for Microsoft SQL Server that retrieve the "
    "5 most recent orders per customer, showing customer_id, order_id, order_date, and total_amount, "
    "where total_amount > 500 and order_date is after '2025-01-01'. "
)

response_mssql = client.responses.create(
    model="gpt-5",
    input=sql_prompt_mssql,
    text={"format": {"type": "text"}},
    tools=[
        {
            "type": "custom",
            "name": "mssql_grammar",
            "description": "Executes read-only Microsoft SQL Server queries limited to SELECT statements with TOP and basic WHERE/ORDER BY. YOU MUST REASON HEAVILY ABOUT THE QUERY AND MAKE SURE IT OBEYS THE GRAMMAR.",
            "format": {
                "type": "grammar",
                "syntax": "lark",
                "definition": mssql_grammar
            }
        },
    ],
    parallel_tool_calls=False
)

print("--- MS SQL Query ---")
# The generated query is the raw input of the custom tool call
print(response_mssql.output[1].input)

Response:

--- MS SQL Query ---
SELECT TOP 5 customer_id, order_id, order_date, total_amount FROM orders
WHERE total_amount > 500 AND order_date > '2025-01-01'
ORDER BY order_date DESC;
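The examples in this section read the generated query from the second output item, which assumes the tool call always sits at that position. A slightly more defensive sketch is to scan the output items for the custom tool call by type and name. `first_custom_tool_input` is a hypothetical helper, and the `SimpleNamespace` objects below are stand-ins for SDK output items, used only so the snippet runs without an API call.

```python
from types import SimpleNamespace
from typing import Any, Iterable, Optional


def first_custom_tool_input(output_items: Iterable[Any], tool_name: str) -> Optional[str]:
    """Return the raw text payload of the first matching custom tool call."""
    for item in output_items:
        is_custom_call = getattr(item, "type", None) == "custom_tool_call"
        if is_custom_call and getattr(item, "name", None) == tool_name:
            return item.input
    # No matching tool call was found in this response.
    return None


if __name__ == "__main__":
    # Stand-in objects that mimic the shape of response.output items.
    fake_output = [
        SimpleNamespace(type="reasoning"),
        SimpleNamespace(
            type="custom_tool_call",
            name="mssql_grammar",
            input="SELECT TOP 5 order_id FROM orders;",
        ),
    ]
    print(first_custom_tool_input(fake_output, "mssql_grammar"))
```

With a real response, the call would look like `first_custom_tool_input(response_mssql.output, "mssql_grammar")`, and a `None` result signals that the model answered without calling the tool.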

This version targets PostgreSQL and uses a postgres_grammar tool to help GPT-5 produce a compliant query. It follows the same logic as the previous example but uses LIMIT to cap the number of returned rows, demonstrating compliant PostgreSQL syntax.

sql_prompt_pg = (
    "Call the postgres_grammar to generate a query for PostgreSQL that retrieve the "
    "5 most recent orders per customer, showing customer_id, order_id, order_date, and total_amount, "
    "where total_amount > 500 and order_date is after '2025-01-01'. "
)

response_pg = client.responses.create(
    model="gpt-5",
    input=sql_prompt_pg,
    text={"format": {"type": "text"}},
    tools=[
        {
            "type": "custom",
            "name": "postgres_grammar",
            "description": "Executes read-only PostgreSQL queries limited to SELECT statements with LIMIT and basic WHERE/ORDER BY. YOU MUST REASON HEAVILY ABOUT THE QUERY AND MAKE SURE IT OBEYS THE GRAMMAR.",
            "format": {
                "type": "grammar",
                "syntax": "lark",
                "definition": postgres_grammar
            }
        },
    ],
    parallel_tool_calls=False,
)

print("--- PG SQL Query ---")
# The generated query is the raw input of the custom tool call
print(response_pg.output[1].input)

Response:

--- PG SQL Query ---
SELECT customer_id, order_id, order_date, total_amount FROM orders
WHERE total_amount > 500 AND order_date > '2025-01-01'
ORDER BY order_date DESC LIMIT 5;
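Both grammars in this section describe a single fixed query shape, so a returned query can also be double-checked locally before it is sent to a database. The sketch below is a regular-expression approximation of the two grammars using only the standard library; it is not the Lark grammar itself, and `matches_dialect` is a hypothetical helper. It expects the query on one line with single spaces, as the grammars require, whereas the responses shown in this article are wrapped across lines for display.

```python
import re

# Terminals, copied from the grammar definitions in this section.
IDENT = r"[A-Za-z_][A-Za-z0-9_]*"
NUMBER = r"[0-9]+"
DATE = r"'[0-9]{4}-[0-9]{2}-[0-9]{2}'"

# Shared fragments: column list, the two filters, and the fixed ordering.
COLUMNS = rf"{IDENT}(?:, {IDENT})*"
FILTERS = rf"WHERE total_amount > {NUMBER} AND order_date > {DATE}"
ORDERING = r"ORDER BY order_date DESC"

PATTERNS = {
    "mssql": re.compile(
        rf"SELECT TOP {NUMBER} {COLUMNS} FROM {IDENT} {FILTERS} {ORDERING};"
    ),
    "postgres": re.compile(
        rf"SELECT {COLUMNS} FROM {IDENT} {FILTERS} {ORDERING} LIMIT {NUMBER};"
    ),
}


def matches_dialect(query: str, dialect: str) -> bool:
    """Return True if the whole query matches the given dialect's shape."""
    return PATTERNS[dialect].fullmatch(query) is not None


if __name__ == "__main__":
    pg_query = (
        "SELECT customer_id, order_id, order_date, total_amount FROM orders "
        "WHERE total_amount > 500 AND order_date > '2025-01-01' "
        "ORDER BY order_date DESC LIMIT 5;"
    )
    print(matches_dialect(pg_query, "postgres"))
    print(matches_dialect(pg_query, "mssql"))
```

A regular expression is enough here only because these particular grammars have no recursion; a grammar with nested expressions would need a real parser.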

Minimal Reasoning Effort

GPT-5 now supports a new minimal reasoning effort. With minimal reasoning effort, the model outputs very few or no reasoning tokens. This is designed for use cases where developers want a very fast time-to-first-user-visible token.

Note: If no reasoning effort is supplied, the default value is medium.

from openai import OpenAI

client = OpenAI()

prompt = "Translate the following sentence to Spanish. Return only the translated text."

response = client.responses.create(
    model="gpt-5",
    input=[
        { 'role': 'developer', 'content': prompt },
        { 'role': 'user', 'content': 'Where is the nearest train station?' }
    ],
    reasoning={ "effort": "minimal" }
)

# Extract model's text output
output_text = ""
for item in response.output:
    # Some output items carry no content, so fall back to an empty list
    for content in getattr(item, "content", None) or []:
        if hasattr(content, "text"):
            output_text += content.text

# Token usage details
usage = response.usage

print("--------------------------------")
print("Output:")
print(output_text)

Response:

--------------------------------
Output:
¿Dónde está la estación de tren más cercana?

Pricing & Token Efficiency

OpenAI offers GPT-5 models in tiers to suit different performance and budget requirements. GPT-5 is suited to complex tasks, GPT-5-mini completes tasks quickly and is cheaper, and GPT-5-nano is for real-time or light use cases. Input tokens reused within a short window get a 90% discount, greatly reducing the cost of multi-turn interactions.

| Model | Input Token Cost (per 1M) | Output Token Cost (per 1M) | Token Limits |
|---|---|---|---|
| GPT-5 | $1.25 | $10.00 | 272K input / 128K output |
| GPT-5-mini | $0.25 | $2.00 | 272K input / 128K output |
| GPT-5-nano | $0.05 | $0.40 | 272K input / 128K output |
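To make the pricing table concrete, the sketch below estimates the cost of a single request from its token counts. The prices and the 90% discount on reused input tokens are copied from this section; `estimate_cost` and its parameter names are hypothetical, and actual billing may differ from this simple model.

```python
# (input price, output price) in USD per 1M tokens, from the table above.
PRICES_PER_MILLION = {
    "gpt-5": (1.25, 10.00),
    "gpt-5-mini": (0.25, 2.00),
    "gpt-5-nano": (0.05, 0.40),
}

# Discount applied to reused (cached) input tokens, as stated in the article.
CACHED_INPUT_DISCOUNT = 0.90


def estimate_cost(
    model: str,
    input_tokens: int,
    output_tokens: int,
    cached_input_tokens: int = 0,
) -> float:
    """Estimate the USD cost of one request under the article's pricing."""
    if cached_input_tokens > input_tokens:
        raise ValueError("cached_input_tokens cannot exceed input_tokens")
    input_price, output_price = PRICES_PER_MILLION[model]

    # Cached input tokens are billed at the discounted rate.
    fresh_input = input_tokens - cached_input_tokens
    cached_price = input_price * (1 - CACHED_INPUT_DISCOUNT)

    total = (
        fresh_input * input_price
        + cached_input_tokens * cached_price
        + output_tokens * output_price
    )
    return total / 1_000_000


if __name__ == "__main__":
    # Token counts from the high-verbosity Fibonacci example (gpt-5-mini).
    cost = estimate_cost("gpt-5-mini", input_tokens=13, output_tokens=1708)
    print(f"${cost:.6f}")
```

For the high-verbosity run, almost all of the estimated cost comes from the 1,708 output tokens, which is why verbosity and reasoning effort are the main cost levers for short prompts.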

Conclusion

GPT-5 marks a new era of AI for developers. It combines top-level coding intelligence with greater control through its API. You can use features such as verbosity control, custom tool calls, grammar enforcement, and minimal reasoning to build more intelligent and dependable applications.

From automating complex workflows to speeding up mundane ones, GPT-5 is designed with the flexibility and performance developers need. Explore and experiment with these features in your projects to fully benefit from GPT-5.

Frequently Asked Questions

A. GPT-5 is the most powerful. GPT-5-mini balances speed and cost. GPT-5-nano is the cheapest and fastest, ideal for lightweight or real-time use cases.

A. Use the verbosity parameter:

- "low" = short

- "medium" = balanced

- "high" = detailed

It is useful for tuning explanations, comments, or code structure.

A. Use the Responses endpoint. It supports tool usage, structured reasoning, and advanced parameters, all through one unified interface. Recommended for most new applications.
