Agentic AI systems act as autonomous digital workers that perform complex tasks with minimal supervision. They are growing rapidly in popularity, to the point that one estimate suggests that by 2025, 35% of companies will implement AI agents. However, autonomy raises concerns in high-stakes fields, where even subtle errors can have serious consequences. As a result, many people believe that human feedback in agentic AI ensures safety, accountability, and trust.

The human-in-the-loop (HITL) validation approach is a collaborative design in which humans validate or influence an AI's outputs. Human checkpoints catch errors earlier and keep the system oriented toward human values, which in turn supports better compliance and greater trust in the agentic AI. It acts as a safety net for complex tasks. In this article, we'll compare workflows with and without HITL to illustrate these trade-offs.

Human-in-the-Loop: Concept and Benefits

Human-in-the-Loop (HITL) is a design pattern in which an AI workflow explicitly includes human judgment at key points. The AI may generate a provisional output and pause to let a human review, approve, or edit it. In such a workflow, the human review step sits between the AI component and the final output.
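The pattern can be sketched in plain Python, independent of any agent framework. This is a minimal illustration under stated assumptions: the names `Review` and `checkpoint` and the `reviewer` callable are hypothetical, and the reviewer stands in for whatever interface actually collects the human decision.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Review:
    """A human decision about a provisional AI output (hypothetical type)."""
    action: str                        # "approve", "edit", or "reject"
    edited_text: Optional[str] = None  # only used when action == "edit"


def checkpoint(provisional: str, reviewer: Callable[[str], Review]) -> Optional[str]:
    """Pause on a provisional output and let a human decide what is released.

    Returns the text to release, or None if the reviewer rejected it.
    """
    review = reviewer(provisional)
    if review.action == "approve":
        return provisional
    if review.action == "edit" and review.edited_text is not None:
        return review.edited_text
    return None  # rejected (or malformed review): nothing is released


if __name__ == "__main__":
    # A scripted reviewer stands in for a real person here.
    def fix_typo(text: str) -> Review:
        return Review("edit", text.replace("teh", "the"))

    print(checkpoint("Summarize teh report.", fix_typo))
```

The point of the design is that nothing reaches the final output without passing through `reviewer`; the AI component only ever produces the provisional text.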

Benefits of Human Validation

- Error reduction and accuracy: A human reviewer catches potential errors in the AI's output and refines it before it is used.

- Trust and accountability: Human validation makes a system's decisions understandable and accountable.

- Compliance and safety: Human interpretation of laws and ethics helps ensure that AI actions conform to regulations and safety requirements.

When NOT to Use Human-in-the-Loop

- Routine or high-volume tasks: Humans are a bottleneck when speed matters. In such scenarios, full automation can be more effective.

- Time-critical systems: Real-time responses can't wait for human input. In rapid content filtering or live alerts, for instance, HITL could hold the system back.
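These two criteria can be turned into a simple routing rule. The sketch below is only an illustration: the `Task` fields, the `needs_human_review` function, and the five-minute reviewer turnaround are assumptions made for the example, not values taken from any study or library.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    high_stakes: bool        # would an error have serious consequences?
    latency_budget_s: float  # how long the caller can wait for a result


# Assumed typical turnaround for a human reviewer; tune this for your team.
HUMAN_REVIEW_SECONDS = 300.0


def needs_human_review(task: Task) -> bool:
    """Require review only when the task is high-stakes and can afford the wait."""
    if task.latency_budget_s < HUMAN_REVIEW_SECONDS:
        return False  # time-critical: a reviewer would hold the system back
    return task.high_stakes


if __name__ == "__main__":
    for task in (
        Task("contract summary", high_stakes=True, latency_budget_s=3600.0),
        Task("live alert", high_stakes=True, latency_budget_s=1.0),
        Task("bulk tagging", high_stakes=False, latency_budget_s=3600.0),
    ):
        print(task.name, "->", "review" if needs_human_review(task) else "automate")
```

Note that this sketch lets a time-critical task through unreviewed even when it is high-stakes; whether that is acceptable is a policy decision rather than something the code can settle.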

What Makes the Difference: Comparing Two Scenarios

Without Human-in-the-Loop

In the fully automated scenario, the agentic workflow proceeds autonomously. As soon as input is provided, the agent generates content and takes the action. For example, an AI assistant might, in some cases, submit a user's time-off request without confirming it. This approach offers the greatest speed and potential scalability. The downside, of course, is that nothing is checked by a human. There is a conceptual difference between an error made by a human and an error made by an AI agent: an agent may misinterpret instructions or perform an undesired action that leads to harmful outcomes, and no one is positioned to notice before the action takes effect.

With Human-in-the-Loop

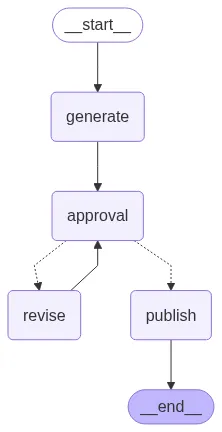

In the HITL (human-in-the-loop) scenario, we insert a human step. After producing a rough draft, the agent stops and asks a person to approve or change it. If the draft is approved, the agent publishes the content. If the draft is not approved, the agent revises it based on the feedback and returns to the approval step. This scenario offers a higher degree of accuracy and trust, since humans can catch errors before anything is finalized. For example, adding a confirmation step reduces "accidental" actions and confirms that the agent did not misunderstand the input. The downside, of course, is that it requires more time and human effort.
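The approve-or-revise cycle described above can be sketched as a small loop before bringing in any framework. The function and parameter names here are hypothetical, and the `max_rounds` cap is an addition of this sketch to keep the loop bounded; the workflow as described has no such limit.

```python
from typing import Callable, Optional, Tuple

# (approved, feedback) as returned by the human reviewer
Decision = Tuple[bool, str]


def review_loop(
    generate: Callable[[], str],
    revise: Callable[[str, str], str],
    ask_human: Callable[[str], Decision],
    max_rounds: int = 3,
) -> Optional[str]:
    """Draft, ask for approval, revise on feedback, and repeat.

    Returns the approved draft, or None if no draft was approved
    within max_rounds review rounds.
    """
    draft = generate()
    for _ in range(max_rounds):
        approved, feedback = ask_human(draft)
        if approved:
            return draft
        draft = revise(draft, feedback)
    return None


if __name__ == "__main__":
    # Scripted answers stand in for a person: reject once, then approve.
    answers = iter([(False, "shorter"), (True, "")])
    result = review_loop(
        generate=lambda: "A long first draft",
        revise=lambda draft, fb: f"{draft} [revised: {fb}]",
        ask_human=lambda draft: next(answers),
    )
    print(result)  # A long first draft [revised: shorter]
```

Passing the generator, reviser, and reviewer in as callables keeps the control flow separate from the model call, so the loop itself can be checked without an API key.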

Example Implementation in LangGraph

Below is an example using LangGraph and GPT-4o-mini. We define two workflows: one fully automated and one with a human approval step.

Scenario 1: Without Human-in-the-Loop

In the first scenario, we create an agent with a simple workflow. It takes the topic of the article (hard-coded in the prompt in this example), uses gpt-4o-mini to generate a draft, and publishes it.

from langgraph.graph import StateGraph, END
from typing import TypedDict
from openai import OpenAI
from dotenv import load_dotenv
import os

load_dotenv()
OPENAI_API_KEY = os.getenv("OPENAI_API_KEY")

# --- OpenAI client ---
client = OpenAI(api_key=OPENAI_API_KEY)  # key is read from the environment (.env)

# --- State Definition ---
class ArticleState(TypedDict):
    draft: str

# --- Nodes ---
def generate_article(state: ArticleState):
    prompt = "Write a professional yet engaging 150-word article about Agentic AI."
    response = client.chat.completions.create(
        model="gpt-4o-mini",
        messages=[{"role": "user", "content": prompt}]
    )
    state["draft"] = response.choices[0].message.content
    print(f"\n[Agent] Generated Article:\n{state['draft']}\n")
    return state

def publish_article(state: ArticleState):
    print(f"[System] Publishing Article:\n{state['draft']}\n")
    return state

# --- Autonomous Workflow ---
def autonomous_workflow():
    print("\n=== Autonomous Publishing ===")
    builder = StateGraph(ArticleState)
    builder.add_node("generate", generate_article)
    builder.add_node("publish", publish_article)
    builder.set_entry_point("generate")
    builder.add_edge("generate", "publish")
    builder.add_edge("publish", END)
    graph = builder.compile()

    # Save diagram
    with open("autonomous_workflow.png", "wb") as f:
        f.write(graph.get_graph().draw_mermaid_png())

    graph.invoke({"draft": ""})

if __name__ == "__main__":
    autonomous_workflow()

Code Implementation: This code sets up a workflow with two nodes, generate_article and publish_article, linked sequentially. When run, the agent prints its draft and then publishes it immediately, with no review in between.

Agent Workflow Diagram

Agent Response

“””Agentic AI refers to advanced artificial intelligence systems that possess the ability to make autonomous decisions based on their environment and objectives. Unlike traditional AI, which relies heavily on predefined algorithms and human input, agentic AI can analyze complex data, learn from experiences, and adapt its behavior accordingly. This technology harnesses machine learning, natural language processing, and cognitive computing to perform tasks ranging from managing supply chains to personalizing user experiences.

The potential applications of agentic AI are vast, transforming industries such as healthcare, finance, and customer service. For instance, in healthcare, agentic AI can analyze patient data to provide tailored treatment recommendations, leading to improved outcomes. As businesses increasingly adopt these autonomous systems, ethical considerations surrounding transparency, accountability, and job displacement become paramount. Embracing agentic AI offers opportunities to enhance efficiency and innovation, but it also requires careful contemplation of its societal impact. The future of AI is not just about automation; it's about intelligent collaboration.

”””

Scenario 2: With Human-in-the-Loop

In this scenario, we first create two tools, revise_article_tool and publish_article_tool. The revise_article_tool revises the article's content according to the user's feedback. Once the user is satisfied with the agent's response, typing publish runs the second tool, publish_article_tool, which outputs the final article.

from langgraph.graph import StateGraph, END
from typing import TypedDict, Literal
from openai import OpenAI
from dotenv import load_dotenv
import os

load_dotenv()

# --- OpenAI client ---
OPENAI_API_KEY = os.getenv("OPENAI_API_KEY")
client = OpenAI(api_key=OPENAI_API_KEY)

# --- State Definition ---
class ArticleState(TypedDict):
    draft: str
    approved: bool
    feedback: str
    selected_tool: str

# --- Tools ---
def revise_article_tool(state: ArticleState):
    """Tool to revise article based on feedback"""
    prompt = f"Revise the following article based on this feedback: '{state['feedback']}'\n\nArticle:\n{state['draft']}"
    response = client.chat.completions.create(
        model="gpt-4o-mini",
        messages=[{"role": "user", "content": prompt}]
    )
    revised_content = response.choices[0].message.content
    print(f"\n[Tool: Revise] Revised Article:\n{revised_content}\n")
    return revised_content

def publish_article_tool(state: ArticleState):
    """Tool to publish the article"""
    print(f"[Tool: Publish] Publishing Article:\n{state['draft']}\n")
    print("Article successfully published!")
    return state['draft']

# --- Available Tools Registry ---
AVAILABLE_TOOLS = {
    "revise": revise_article_tool,
    "publish": publish_article_tool
}

# --- Nodes ---
def generate_article(state: ArticleState):
    prompt = "Write a professional yet engaging 150-word article about Agentic AI."
    response = client.chat.completions.create(
        model="gpt-4o-mini",
        messages=[{"role": "user", "content": prompt}]
    )
    state["draft"] = response.choices[0].message.content
    print(f"\n[Agent] Generated Article:\n{state['draft']}\n")
    return state

def human_approval_and_tool_selection(state: ArticleState):
    """Human validates and selects which tool to use"""
    print("Available actions:")
    print("1. Publish the article (type 'publish')")
    print("2. Revise the article (type 'revise')")
    print("3. Reject and provide feedback (type 'feedback')")

    decision = input("\nWhat would you like to do? ").strip().lower()

    if decision == "publish":
        state["approved"] = True
        state["selected_tool"] = "publish"
        print("Human validated: PUBLISH tool selected")
    elif decision in ("revise", "feedback"):
        # Both options lead to a revision driven by the human's feedback
        state["approved"] = False
        state["selected_tool"] = "revise"
        state["feedback"] = input("Please provide feedback for revision: ").strip()
        print("Human validated: REVISE tool selected with feedback")
    else:
        print("Invalid input. Defaulting to revision...")
        state["approved"] = False
        state["selected_tool"] = "revise"
        state["feedback"] = input("Please provide feedback for revision: ").strip()

    return state

def execute_validated_tool(state: ArticleState):
    """Execute the human-validated tool"""
    tool_name = state["selected_tool"]

    if tool_name in AVAILABLE_TOOLS:
        print(f"\n Executing validated tool: {tool_name.upper()}")
        tool_function = AVAILABLE_TOOLS[tool_name]

        if tool_name == "revise":
            # Update the draft with revised content
            state["draft"] = tool_function(state)
            # Reset approval status for next iteration
            state["approved"] = False
            state["selected_tool"] = ""
        elif tool_name == "publish":
            # Execute publish tool
            tool_function(state)
            state["approved"] = True
    else:
        print(f"Error: Tool '{tool_name}' not found in available tools")

    return state

# --- Workflow Routing Logic ---
def route_after_tool_execution(state: ArticleState) -> Literal["approval", "end"]:
    """Route based on whether the article was published or needs more approval"""
    # selected_tool is cleared after a revision, so only a publish ends the run
    if state["selected_tool"] == "publish":
        return "end"
    else:
        return "approval"

# --- HITL Workflow ---
def hitl_workflow():
    print("\n=== Human-in-the-Loop Publishing with Tool Validation ===")
    builder = StateGraph(ArticleState)

    # Add nodes
    builder.add_node("generate", generate_article)
    builder.add_node("approval", human_approval_and_tool_selection)
    builder.add_node("execute_tool", execute_validated_tool)

    # Set entry point
    builder.set_entry_point("generate")

    # Add edges
    builder.add_edge("generate", "approval")
    builder.add_edge("approval", "execute_tool")

    # Add conditional edges after tool execution
    builder.add_conditional_edges(
        "execute_tool",
        route_after_tool_execution,
        {"approval": "approval", "end": END}
    )

    # Compile graph
    graph = builder.compile()

    # Save diagram
    try:
        with open("hitl_workflow_with_tools.png", "wb") as f:
            f.write(graph.get_graph().draw_mermaid_png())
        print("Workflow diagram saved as 'hitl_workflow_with_tools.png'")
    except Exception as e:
        print(f"Could not save diagram: {e}")

    # Execute workflow
    initial_state = {
        "draft": "",
        "approved": False,
        "feedback": "",
        "selected_tool": ""
    }
    graph.invoke(initial_state)

if __name__ == "__main__":
    hitl_workflow()
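Because the approval node calls `input()` directly, it can only be exercised interactively. One possible variation, offered here as an assumption rather than as part of the original code, takes the prompt function as a parameter so the same decision logic can be driven by scripted answers in a test. The name `select_tool` and the `ask` parameter are hypothetical.

```python
from typing import Callable, Dict


def select_tool(state: Dict, ask: Callable[[str], str] = input) -> Dict:
    """Map a human's answer to a tool choice; `ask` defaults to input()."""
    decision = ask("What would you like to do? ").strip().lower()
    if decision == "publish":
        state["approved"] = True
        state["selected_tool"] = "publish"
    else:
        # "revise", "feedback", and unrecognized answers all lead to a revision
        state["approved"] = False
        state["selected_tool"] = "revise"
        state["feedback"] = ask("Please provide feedback for revision: ").strip()
    return state


if __name__ == "__main__":
    # Scripted answers replace the keyboard: choose revise, then give feedback.
    scripted = iter(["revise", "Keep it simple"])
    result = select_tool({"draft": "..."}, ask=lambda prompt: next(scripted))
    print(result["selected_tool"], "|", result["feedback"])
```

In an interactive run the default `ask=input` behaves like the original node, so the change only matters when a test or a different front end supplies the answers.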

"""Human Feedback:

Keep the discussion broad and simple so that both tech and non-tech people can understand

"""

Agent Workflow Diagram

Agent Response

“””Understanding Agentic AI: The Future of Intelligent Assistance

Agentic AI represents a groundbreaking advancement in the field of artificial intelligence, characterized by its ability to operate independently while exhibiting goal-directed behavior. Unlike traditional AI systems that require constant human intervention, Agentic AI can analyze data, make decisions, and execute tasks autonomously. This innovative technology has the potential to transform various sectors, including healthcare, finance, and customer service, by streamlining processes and enhancing efficiency. One of the most notable features of Agentic AI is its adaptability; it learns from interactions and outcomes, continuously improving its performance. As more businesses adopt this technology, the opportunities for personalized user experiences and advanced predictive analytics expand significantly. However, the rise of Agentic AI also raises important discussions about ethics, accountability, and security. Striking the right balance between leveraging its capabilities and ensuring responsible usage will be crucial as we navigate this new era of intelligent automation. Embracing Agentic AI could fundamentally change our interactions with technology, ultimately enriching our daily lives and reshaping industries.

Article successfully published!

”””

Observations

This demonstration reflected common HITL outcomes. With human review, the final article was clearer and more accurate, consistent with findings that HITL improves AI output quality. Human feedback removed errors and refined phrasing, confirming these benefits. Meanwhile, each review cycle added latency and workload. The automated run finished almost instantly, while the HITL workflow paused twice for feedback. In practice, this trade-off is expected: machines provide speed, but humans provide precision.
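To put numbers on this trade-off in your own runs, one option is to time each stage separately. The helper below is a small sketch using only the standard library; the `timed` context manager and the stage names are illustrative assumptions, and the `sleep` calls merely stand in for the model call and the human review.

```python
import time
from collections import defaultdict
from contextlib import contextmanager

# Accumulated wall-clock seconds per stage name
stage_seconds = defaultdict(float)


@contextmanager
def timed(stage: str):
    """Add the wall-clock time spent inside the block to stage_seconds[stage]."""
    start = time.perf_counter()
    try:
        yield
    finally:
        stage_seconds[stage] += time.perf_counter() - start


if __name__ == "__main__":
    with timed("model"):
        time.sleep(0.01)  # stand-in for the LLM call
    with timed("human"):
        time.sleep(0.05)  # stand-in for waiting on a reviewer
    for stage, seconds in stage_seconds.items():
        print(f"{stage}: {seconds:.3f}s")
```

Wrapping the body of the approval node in `with timed("human"):` and the generation node in `with timed("model"):` would show how much of a run's total time is spent waiting for review.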

Conclusion

In conclusion, human feedback can significantly improve agentic AI output. It acts as a safety net for errors and keeps outputs aligned with human intent. In this article, we showed that even a simple review step improved the reliability of the text. The decision to use HITL should ultimately be based on context: use human review in important cases and skip it in routine situations.

As the use of agentic AI increases, the question of when to automate and when to apply oversight becomes more important. Regulations and best practices increasingly require some level of human review in high-risk AI implementations. The overall idea is to use automation for its efficiency while still having humans take ownership of key decisions. Flexible human checkpoints will help us use agentic AI safely and responsibly.


Frequently Asked Questions

A. HITL is a design in which humans validate AI outputs at key points. It ensures accuracy, safety, and alignment with human values by adding review steps before final actions.

A. HITL is unsuitable for routine, high-volume, or time-critical tasks where human intervention slows performance, such as live alerts or real-time content filtering.

A. Human feedback reduces errors, ensures compliance with laws and ethics, and builds trust and accountability in AI decision-making.

A. Without HITL, AI acts autonomously and quickly but risks unchecked errors. With HITL, humans review drafts, which improves reliability but adds time and effort.

A. Oversight ensures that AI actions remain safe, ethical, and aligned with human intent, especially in high-stakes applications where errors have serious consequences.
