Amid much anticipation, Baidu introduced ERNIE X1.1 at Wave Summit in Beijing last night. It felt like a pivot from flashy demos to practical reliability, as Baidu positioned the new ERNIE variant as a reasoning-first model that behaves. As someone who writes, codes, and ships agentic workflows daily, that pitch mattered. The promise is simple: fewer hallucinations, cleaner instruction following, and better tool use. These three traits decide whether a model lives in my stack or becomes a weekend experiment. Early signs suggest ERNIE X1.1 may stick.

ERNIE X1.1: What’s New

As mentioned, ERNIE X1.1 is Baidu’s latest reasoning model, and it inherits the ERNIE 4.5 base. It then stacks mid-training and post-training with an iterative hybrid RL recipe. The focus is stable chain-of-thought, not just longer thoughts. That matters because, in day-to-day work, you want a model that respects constraints and uses tools correctly.

Baidu reports three headline deltas over ERNIE X1. Factuality is up 34.8%. Instruction following rises 12.5%. Agentic capabilities improve 9.6%. The company also claims benchmark wins over DeepSeek R1-0528. It says ERNIE X1.1 reaches parity with GPT-5 and Gemini 2.5 Pro on overall performance. Independent checks will take time. But the training recipe signals a reliability push.

How to Access ERNIE X1.1

You have three clear paths to try the new ERNIE model today.

ERNIE Bot (Web)

Use the ERNIE Bot website to chat with ERNIE X1.1. Baidu says ERNIE X1.1 is now available there. Accounts are straightforward for China-based users. International users can still sign in, though the UI leans toward Chinese.

Wenxiaoyan Mobile App

The consumer app is the rebranded ERNIE experience in China. It supports text, search, and image features in one place. Availability is via Chinese app stores. A Chinese App Store account can help with iOS. Baidu lists the app as a launch surface for ERNIE X1.1.

Qianfan API (Baidu AI Cloud)

Teams can deploy ERNIE X1.1 through Qianfan, Baidu’s MaaS platform. The press release confirms that the new ERNIE model is deployed on Qianfan for enterprises and developers. You can integrate quickly using SDKs and LangChain endpoints. This is the path I prefer for agents, tools, and orchestration.

Note: Baidu has made ERNIE Bot free for consumers this year. That move improved reach and testing volume. It also suggests steady cost optimizations.

Hands-on with ERNIE X1.1

I kept the tests close to daily work and pushed the model on structure, layout, and code. Each task reflects a real deliverable, with particular value placed on obeying constraints first.

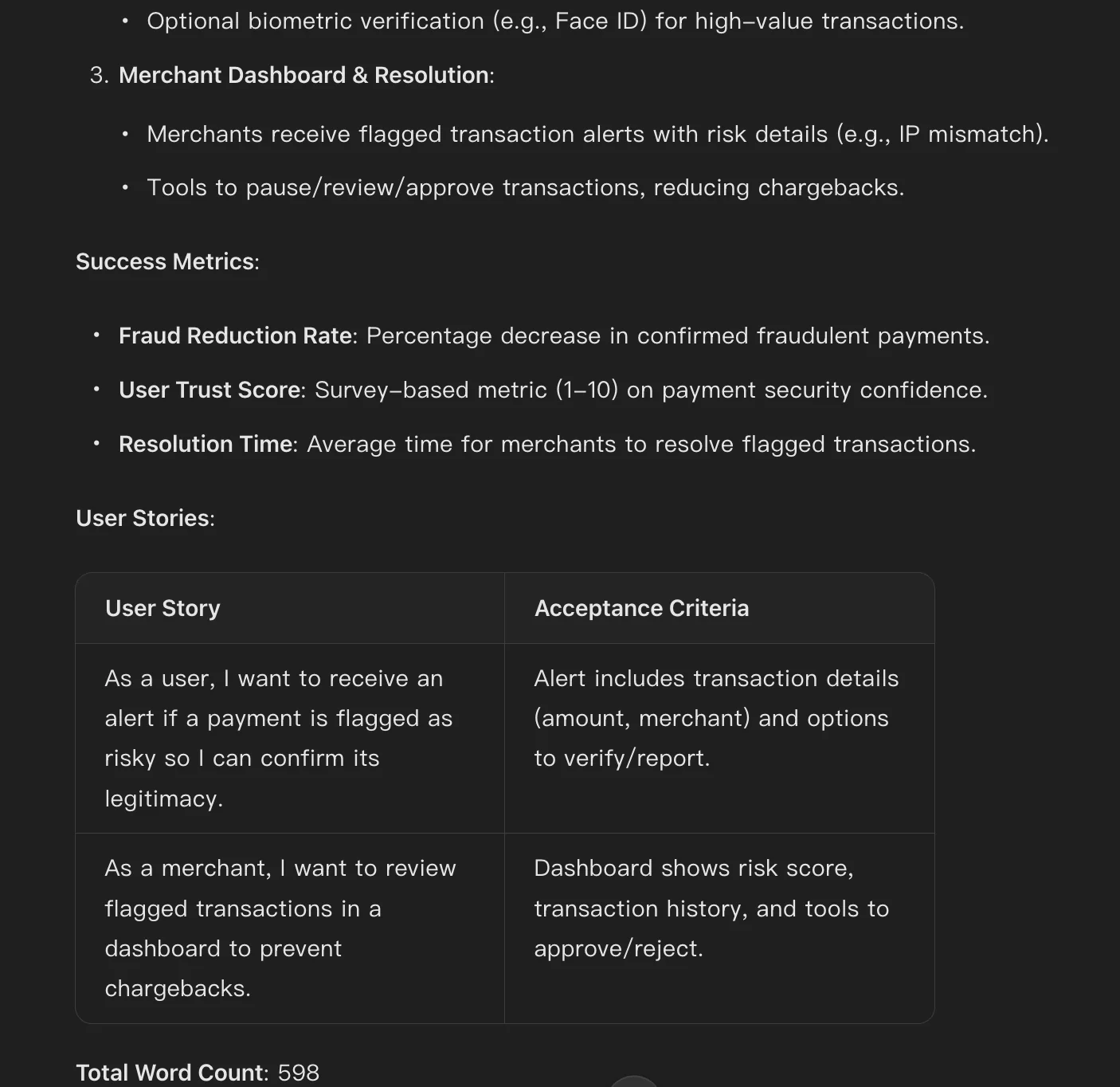

Text generation: constraint-heavy PRD draft

- Goal: Produce a PRD with strict sections and a hard word cap.

- Why this matters: Many models drift on length and headings. ERNIE X1.1 claims tighter control.

Prompt:

“Draft a PRD for a mobile feature that flags risky in-app payments. Include: Background, Goals, Target Users, Three Core Features, Success Metrics. Add 2 user stories in a two-column table. Keep it under 600 words. No extra sections. No marketing tone.”

Output:

Take: The structure looks neat. Headings stay disciplined. Table formatting holds.
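Constraint adherence of this kind can also be checked mechanically rather than by eye. The sketch below is a minimal, hypothetical checker, not part of the original test. It assumes the model returns Markdown with each required section as a `##` heading, and it uses a plain whitespace split as an approximate word count (table pipes and heading markers count as words). The name `check_prd` and the constants are illustrative.

```python
import re
from typing import Dict, List, Union

# Section names taken from the prompt; the "##" heading level is an assumption.
REQUIRED_SECTIONS = [
    "Background",
    "Goals",
    "Target Users",
    "Three Core Features",
    "Success Metrics",
]
WORD_CAP = 600  # the prompt asks for "under 600 words"


def check_prd(markdown: str) -> Dict[str, Union[List[str], int, bool]]:
    """Report missing sections, extra sections, and whether the word cap holds."""
    # Collect the text of every level-2 heading, one per line.
    headings = re.findall(r"^##[ \t]+(.+?)[ \t]*$", markdown, flags=re.MULTILINE)
    missing = [s for s in REQUIRED_SECTIONS if s not in headings]
    extra = [h for h in headings if h not in REQUIRED_SECTIONS]
    # Approximate word count: every whitespace-separated token counts.
    word_count = len(markdown.split())
    return {
        "missing": missing,
        "extra": extra,
        "word_count": word_count,
        "within_cap": word_count < WORD_CAP,
    }


if __name__ == "__main__":
    draft = "## Background\nFraud is rising.\n## Goals\nFlag risky payments.\n"
    print(check_prd(draft))
```

The checker does not validate the two-column user-story table or the tone requirement; those would need separate checks or a manual read.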

Image generation: reasoning-guided layout and variant control

- Goal: Design a 1080×1350 event poster, then create a clean variant.

- Why this matters: I expect ERNIE X1.1 to plan before rendering. Reasoning should guide tool calls.

Prompt:

“Design a 1080×1350 poster for ‘Knowledge + Dharma 2025’. Combine a skyline, a temple silhouette, and a circuit board pattern. Place the hero title top-center. Put the subtitle bottom-left. Place sponsor logos bottom-right. Keep contrast high and text readable on mobile.”

Follow-up prompt:

“Create a second variant. Change subtitle to ‘Ancient Ideas. Modern Intelligence.’ Keep layout identical. Increase logo padding by 12 pixels.”

Output:

Take: ERNIE X1.1 clearly failed here. The model could not manage the finer details of the prompt, and it took a long time to come up with an unusable output.

Code generation: FastAPI service for hallucination risk

- Goal: Ship a tiny service that scores hallucination risk. Include unit tests.

- Why this matters: I want scaffolds that run on the first try. ERNIE X1.1 should deliver a clean structure.

Prompt:

“Write a Python FastAPI service /risk that accepts {text, sources[]} and returns hallucination_risk in [0,1]. Use lexical overlap and a simple contradiction check. Include pytest unit tests. No external SaaS calls. Keep it production-readable.”

Output:

    from fastapi import FastAPI, HTTPException
    from pydantic import BaseModel
    from typing import List

    app = FastAPI()

    class RiskRequest(BaseModel):
        text: str
        sources: List[str]

    class RiskResponse(BaseModel):
        hallucination_risk: float

    def calculate_lexical_overlap(text: str, sources: List[str]) -> float:
        """Calculate Jaccard similarity between text and sources"""
        text_words = set(text.lower().split())
        total_similarity = 0.0
        valid_sources = [s for s in sources if s.strip()]
        if not valid_sources:
            return 0.0
        for source in valid_sources:
            source_words = set(source.lower().split())
            intersection = text_words & source_words
            union = text_words | source_words
            similarity = len(intersection) / len(union) if union else 0
            total_similarity += similarity
        return total_similarity / len(valid_sources)

    def calculate_contradiction(text: str, sources: List[str]) -> float:
        """Simple NLI-inspired contradiction detection"""
        if not sources:
            return 0.0
        negation_words = {'not', 'no', 'never', 'none', 'without'}
        contradiction_count = 0
        for source in sources:
            source_tokens = source.lower().split()
            text_tokens = text.lower().split()
            # Check for direct contradictions
            for i, token in enumerate(text_tokens):
                if token in negation_words and i+1 < len(text_tokens):
                    if text_tokens[i+1] in source_tokens:
                        contradiction_count += 1
                        break
        return min(contradiction_count / len(sources), 1.0)

    @app.post("/risk", response_model=RiskResponse)
    def risk_endpoint(request: RiskRequest):
        """Main endpoint for hallucination risk calculation"""
        if not request.sources:
            raise HTTPException(status_code=400, detail="Sources list cannot be empty")
        lexical_overlap = calculate_lexical_overlap(request.text, request.sources)
        contradiction = calculate_contradiction(request.text, request.sources)
        hallucination_risk = 0.7 * lexical_overlap + 0.3 * contradiction
        return RiskResponse(hallucination_risk=round(min(max(hallucination_risk, 0.0), 1.0), 4))

    # Unit tests
    import pytest

    def test_lexical_overlap():
        assert round(calculate_lexical_overlap("the quick brown fox",
                                               ["the quick fox", "a slow fox"]), 3) == 0.458
        assert calculate_lexical_overlap("test", []) == 0.0
        assert round(calculate_lexical_overlap("hello world",
                                               ["hello", "world"]), 3) == 0.500

    def test_contradiction_detection():
        assert calculate_contradiction("the sky is not blue",
                                       ["the sky is blue"]) == 1.0
        assert calculate_contradiction("I like apples",
                                       ["I like oranges"]) == 0.0

    def test_endpoint_validation():
        try:
            RiskRequest(text="test", sources=[])
            assert False, "Expected validation error"
        except HTTPException as e:
            assert e.status_code == 400

    # Run tests if executed directly
    if __name__ == "__main__":
        pytest.main([__file__])

Early Impressions
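One detail in the generated service above deserves a closer look. The final score adds lexical overlap with a positive weight, so a text that matches its sources more closely scores as riskier, which looks inverted. The validation test also constructs `RiskRequest` directly, which never reaches the endpoint and so would not raise an `HTTPException`. The sketch below is one possible correction to the scoring only, not ERNIE X1.1's output. It assumes risk should fall as overlap rises and keeps the same 0.7/0.3 weights. The names `jaccard_overlap` and `combined_risk` are hypothetical, and the code uses only the standard library.

```python
from typing import List


def jaccard_overlap(text: str, sources: List[str]) -> float:
    """Mean Jaccard similarity between the text and each non-empty source."""
    text_words = set(text.lower().split())
    valid_sources = [s for s in sources if s.strip()]
    if not valid_sources:
        return 0.0
    total = 0.0
    for source in valid_sources:
        source_words = set(source.lower().split())
        union = text_words | source_words
        if union:
            total += len(text_words & source_words) / len(union)
    return total / len(valid_sources)


def combined_risk(overlap: float, contradiction: float) -> float:
    """Assumed combination: low overlap and high contradiction both raise risk."""
    risk = 0.7 * (1.0 - overlap) + 0.3 * contradiction
    # Clamp to [0, 1] and round, mirroring the generated endpoint.
    return round(min(max(risk, 0.0), 1.0), 4)


if __name__ == "__main__":
    overlap = jaccard_overlap("the sky is blue", ["the sky is blue"])
    print(combined_risk(overlap, contradiction=0.0))
```

With this combination, a text identical to its only source and free of contradictions scores at the bottom of the range, while a text with no shared words and a detected contradiction scores at the top.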

Here is my honest take so far: ERNIE X1.1 thinks a lot. It second-guesses many steps. Simple tasks sometimes trigger long internal reasoning, slowing outputs that you expect to be quick.

On some prompts, ERNIE X1.1 feels overcautious. It insists on planning beyond the task. The extra thinking sometimes hurts coherence. Short answers become meandering and unsure, much like a human overthinking.

When ERNIE X1.1 hits the groove, it behaves well. It respects format and section order, and it can keep tables tight and code neat. The “think time,” though, often feels heavy.

In future use, I’ll tune prompts to curb this by reducing instruction ambiguity and adding stricter constraints. For everyday drafts, the extra thinking needs restraint. ERNIE X1.1 shows promise, but it must pace itself.

Limitations and Open Questions

Access outside China still involves friction on mobile. ERNIE X1.1 works best through the web or API interface. Pricing details remain unclear at launch. I also want external benchmark checks, as the vendor’s launch claims sound too bold to take at face value.

The “thinking” depth needs user control. A visible knob could help here. If it were up to me, I would add a fast mode for quick drafts and emails, and a deep mode for agents and tools. ERNIE X1.1 can benefit from clear distinctions.

Conclusion

ERNIE X1.1 aims for reliability, not flash. The claim is fewer hallucinations and better compliance. My runs show strong structure and decent code. Yet the model often overthinks. That hurts speed and coherence on simple asks.

I’ll keep testing with tighter prompts. I’ll lean on API paths for agents. If Baidu exposes “think” control, adoption will rise. Until then, ERNIE X1.1 stays in my toolkit for strict drafts and clean scaffolds. It just needs to breathe between thoughts.
