In context: Some industry experts boldly claim that generative AI will soon replace human software developers. With tools like GitHub Copilot and AI-driven "vibe" coding startups, it may seem that AI has already significantly changed software engineering. However, a new study suggests that AI still has a long way to go before it can replace human programmers.

The Microsoft Research study acknowledges that while today's AI coding tools can boost productivity by suggesting examples, they are limited in their ability to actively seek new information or interact with code execution when those suggestions fail. Human developers, by contrast, routinely do both when debugging, which highlights a significant gap in AI's capabilities.

Microsoft introduced a new environment called debug-gym to explore and address these challenges. The platform lets AI models debug real-world codebases using tools similar to those developers use, enabling the information-seeking behavior that is essential for effective debugging.

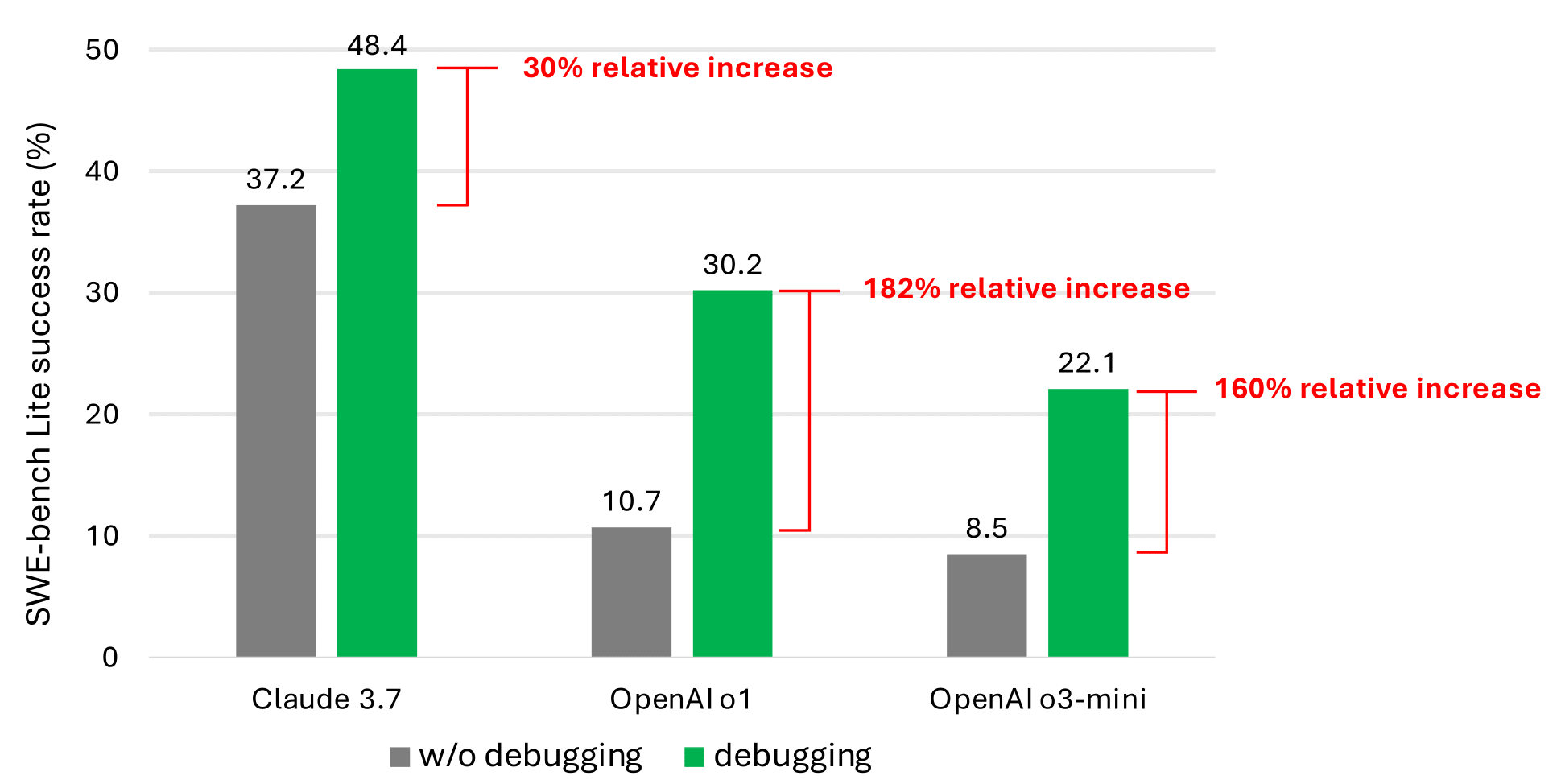

Microsoft tested how well a simple AI agent, built on existing language models, could debug real-world code using debug-gym. While the results were promising, they were still limited. Despite having access to interactive debugging tools, the prompt-based agents rarely solved more than half of the benchmark tasks. That is far from the level of competence needed to replace human engineers.

The research identifies two key issues at play. First, the training data for today's LLMs lacks sufficient examples of the decision-making behavior typical of real debugging sessions. Second, these models are not yet able to use debugging tools to their full potential.

"We believe this is due to the scarcity of data representing sequential decision-making behavior (e.g., debugging traces) in the current LLM training corpus," the researchers said.

Of course, artificial intelligence is advancing rapidly. Microsoft believes that, with the right focused training approaches, language models can become far more capable debuggers over time. One approach the researchers suggest is creating specialized training data focused on debugging processes and trajectories. For example, they propose developing an "info-seeking" model that gathers relevant debugging context and passes it on to a larger code generation model.

The broader findings align with previous studies showing that while artificial intelligence can sometimes generate seemingly functional applications for specific tasks, the resulting code often contains bugs and security vulnerabilities. Until artificial intelligence can handle this core function of software development, it will remain an assistant, not a replacement.