The big picture: A big challenge in analyzing a rapidly growing company like Nvidia is making sense of all the different businesses it participates in, the numerous products it announces, and the overall strategy it's pursuing. Following the keynote speech by CEO Jensen Huang at the company’s annual GTC conference this year, the task was particularly daunting. As usual, Huang covered an enormous range of topics over a lengthy presentation and, frankly, left more than a few people scratching their heads.

However, during an enlightening Q&A session with industry analysts a few days later, Huang shared several insights that suddenly made all the various product and partnership announcements he covered, as well as the thinking behind them, crystal clear.

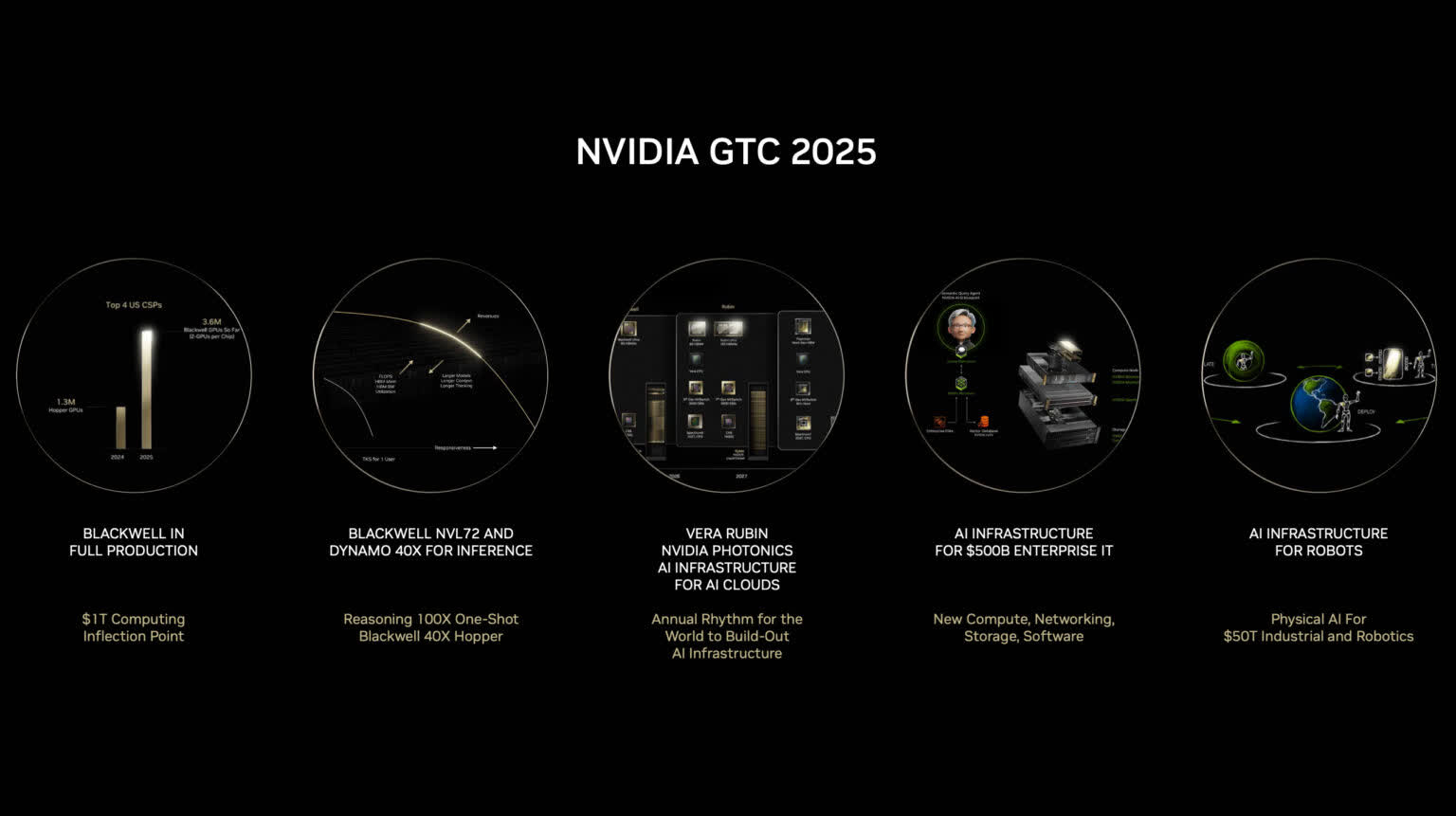

In essence, he said that Nvidia is now an AI infrastructure provider, building a platform of hardware and software that large cloud computing providers, tech vendors, and enterprise IT departments can use to develop AI-powered applications.

Needless to say, that's an extraordinarily far cry from its role as a provider of graphics chips for PC gaming, or even from its efforts to help drive the creation of machine learning algorithms. Yet it unifies several seemingly disparate announcements from recent events and provides a clear indication of where the company is heading.

Nvidia is moving beyond its origins and its reputation as a semiconductor design house into the critical role of an infrastructure enabler for the future world of AI-powered capabilities, or, as Huang described it, an “intelligence manufacturer.”

In his GTC keynote, Huang discussed Nvidia’s efforts to enable efficient generation of tokens for modern foundation models, linking these tokens to intelligence that organizations will leverage for future revenue generation. He described these initiatives as building an AI factory, relevant to an extensive range of industries.

While it's a bit of a heady vision, the signs of an emerging information-driven economy, and of the efficiencies AI brings to traditional manufacturing, are starting to become clear. From businesses built entirely on AI services (think ChatGPT) through the robotic manufacturing and distribution of traditional goods, there’s little doubt we’re moving into an exciting new economic era.

In this context, Huang extensively outlined how Nvidia’s latest offerings facilitate faster and more efficient token creation. He first addressed AI inference, commonly considered simpler than the AI training processes that originally brought Nvidia into prominence.

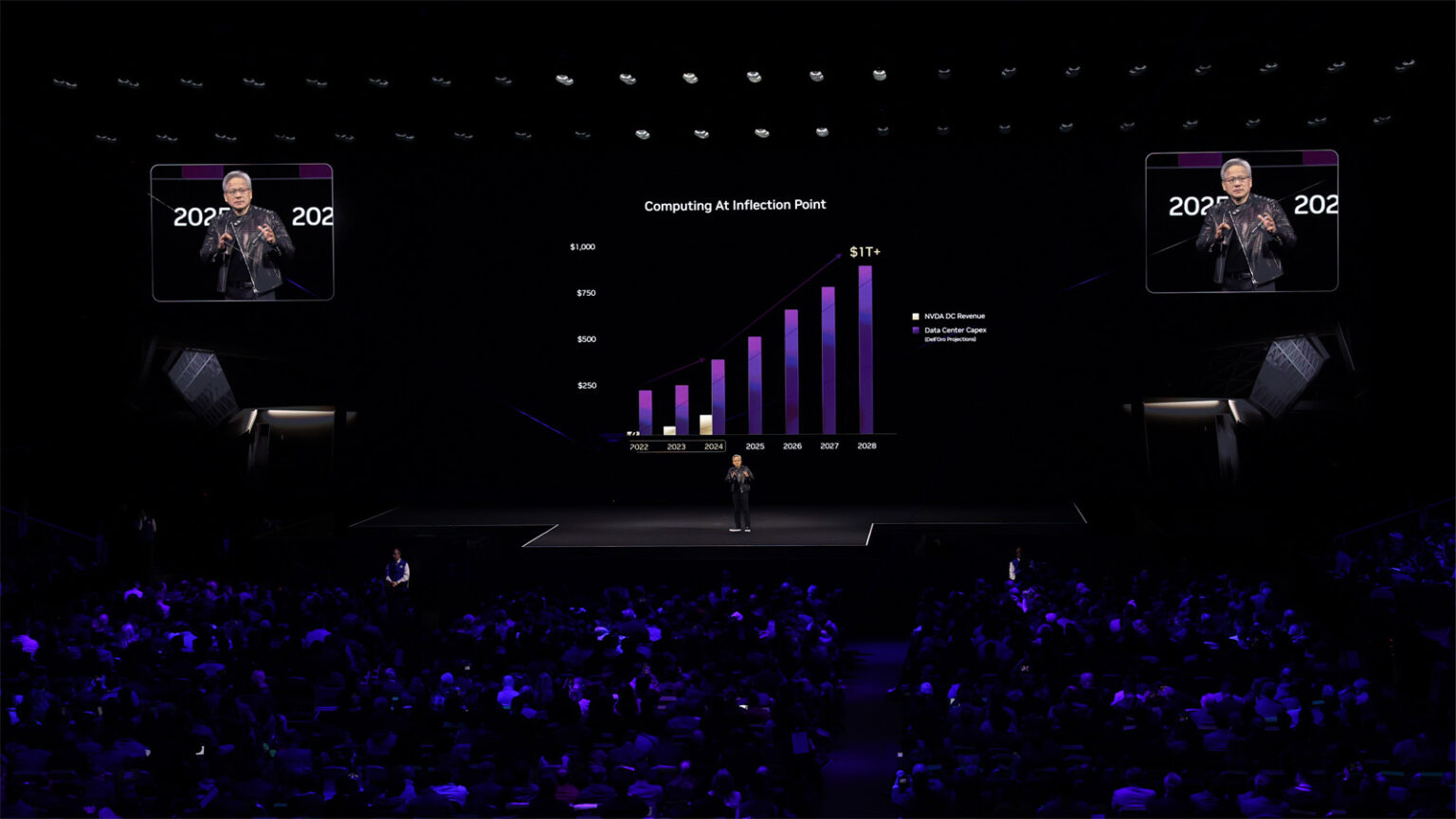

However, Huang argued that inference, particularly when used with new chain-of-thought reasoning models such as DeepSeek R1 and OpenAI’s o1, will require roughly 100 times more computing resources than current one-shot inference methods. In other words, there's no reason to worry that more efficient large language models will reduce the demand for computing infrastructure, and we’re still in the early stages of the AI factory infrastructure buildout.

One of Huang’s most important yet least understood announcements was a new software tool called Nvidia Dynamo, designed to enhance the inference process for advanced models.

Dynamo, an upgraded version of Nvidia’s Triton inference server software, dynamically allocates GPU resources for various inference stages, such as prefill and decode, each with distinct computing requirements. It also creates dynamic information caches, managing data efficiently across different memory types.

Operating similarly to Docker’s orchestration of containers in cloud computing, Dynamo intelligently manages the resources and data necessary for token generation in AI factory environments. Nvidia has dubbed Dynamo the “OS of AI factories.” Practically speaking, Dynamo enables organizations to handle up to 30 times more inference requests with the same hardware resources.
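To make the idea of splitting GPUs between inference stages a little more concrete, here is a minimal Python sketch. It is not Dynamo's API or Nvidia's actual scheduling logic; the `StageLoad` class, the `split_gpus` function, and the throughput figures are all hypothetical, chosen only to illustrate dividing a fixed GPU pool between prefill and decode in proportion to how much work each stage has waiting.

```python
from dataclasses import dataclass


@dataclass
class StageLoad:
    """Pending work for one inference stage (hypothetical units)."""
    name: str
    pending_tokens: int   # tokens waiting to be processed in this stage
    tokens_per_gpu: int   # assumed per-GPU throughput for this stage


def split_gpus(total_gpus: int, stages: list[StageLoad]) -> dict[str, int]:
    """Divide a fixed GPU pool across stages in proportion to demand.

    Demand is the number of GPUs a stage would need to clear its backlog.
    Every stage keeps at least one GPU; GPUs left over after rounding down
    go to the stages with the largest fractional remainders.
    """
    if total_gpus < len(stages):
        raise ValueError("need at least one GPU per stage")

    demand = {s.name: s.pending_tokens / s.tokens_per_gpu for s in stages}
    total_demand = sum(demand.values())
    spare = total_gpus - len(stages)  # GPUs beyond the one-per-stage floor

    if total_demand == 0:
        # No backlog anywhere: spread the spare GPUs evenly.
        shares = {name: spare / len(stages) for name in demand}
    else:
        shares = {name: spare * d / total_demand for name, d in demand.items()}

    alloc = {name: 1 + int(share) for name, share in shares.items()}
    leftover = total_gpus - sum(alloc.values())
    by_remainder = sorted(
        shares, key=lambda n: shares[n] - int(shares[n]), reverse=True
    )
    for name in by_remainder[:leftover]:
        alloc[name] += 1
    return alloc


# Example: an 8-GPU pool with a heavier prefill backlog (made-up numbers).
allocation = split_gpus(
    8,
    [StageLoad("prefill", 6000, 1000), StageLoad("decode", 2000, 500)],
)
print(allocation)
```

Re-running a split like this as the backlogs change is the rough intuition behind "dynamic" allocation; a real system would also have to account for memory, caching, and the cost of moving work between GPUs.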

Of course, it wouldn't be GTC if Nvidia didn't also have chip and hardware announcements, and there were plenty this time around. Huang presented a roadmap for future GPUs, including an update to the current Blackwell series called Blackwell Ultra (GB300 series), offering enhanced onboard HBM memory for improved performance.

He also unveiled the new Vera Rubin architecture, featuring a new Arm-based CPU called Vera and a next-generation GPU named Rubin, each incorporating substantially more cores and advanced capabilities. Huang even hinted at the generation beyond that, named after physicist Richard Feynman, projecting Nvidia’s roadmap into 2028 and beyond.

During the subsequent Q&A session, Huang explained that revealing future products well in advance is crucial for ecosystem partners, enabling them to prepare adequately for upcoming technological shifts.

Huang also emphasized several partnerships announced at this year’s GTC. The significant presence of other tech vendors demonstrated their eagerness to participate in this growing ecosystem. On the compute side, Huang explained that fully maximizing AI infrastructure requires advancements in all traditional areas of the computing stack, including networking and storage.

To that end, Nvidia unveiled new silicon photonics technology for optical networking between GPU-accelerated server racks and discussed a partnership with Cisco. The Cisco partnership enables the use of Cisco silicon in routers and switches designed for integrating GPU-accelerated AI factories into enterprise environments, along with a shared software management layer.

For storage, Nvidia collaborated with leading hardware providers and data platform companies, ensuring their solutions could leverage GPU acceleration, thus expanding Nvidia’s market influence.

And finally, building on the diversification strategy, Huang introduced more work that the company is doing for autonomous vehicles (notably a deal with GM) and robotics, both of which he described as part of the next big stage in AI development: physical AI.

Nvidia has been providing components to automakers for many years now and, similarly, has had robotics platforms for several years as well. What’s different now, however, is that they're being tied back to AI infrastructure that can be used to better train the models that will be deployed into these devices, as well as providing the real-time inferencing data that's needed to operate them in the real world.

While this tie back to infrastructure is arguably a relatively modest advance, in the bigger context of the company’s overall AI infrastructure strategy, it does make more sense and helps tie together many of the company’s initiatives into a cohesive whole.

Making sense of all the various elements that Huang and Nvidia unveiled at this year’s GTC isn't simple, particularly because of the firehose-like nature of all the different announcements and the much broader reach of the company’s ambitions. Once the pieces do come together, however, Nvidia’s strategy becomes clear: the company is taking on a much larger role than ever before and is well-positioned to achieve its ambitious objectives.

At the end of the day, Nvidia knows that being an infrastructure and ecosystem provider means that it can benefit both directly and indirectly as the overall tide of AI computing rises, even as its direct competition is bound to increase. It's a clever strategy and one that could lead to even greater growth in the future.

Bob O’Donnell is the founder and chief analyst of TECHnalysis Research, LLC, a technology consulting firm that provides strategic consulting and market research services to the technology industry and professional financial community. You can follow him on Twitter @bobodtech