Information Flows: Nvidia developed NVLink in 2014 as an alternative technology for connecting GPUs and other computing components in servers and data centers. Unlike PCI Express, NVLink-connected devices use mesh-style networking communication instead of relying on a central hub. Now, Nvidia is introducing an enhanced solution tailored for the next generation of AI data centers.

At this year’s Computex, Nvidia unveiled a new generation of its NVLink interconnect technology. Called NVLink Fusion, the technology is designed to support semi-custom AI infrastructure and data center deployments. The company has already partnered with an impressive list of industry leaders working to adopt the new silicon in their own products.

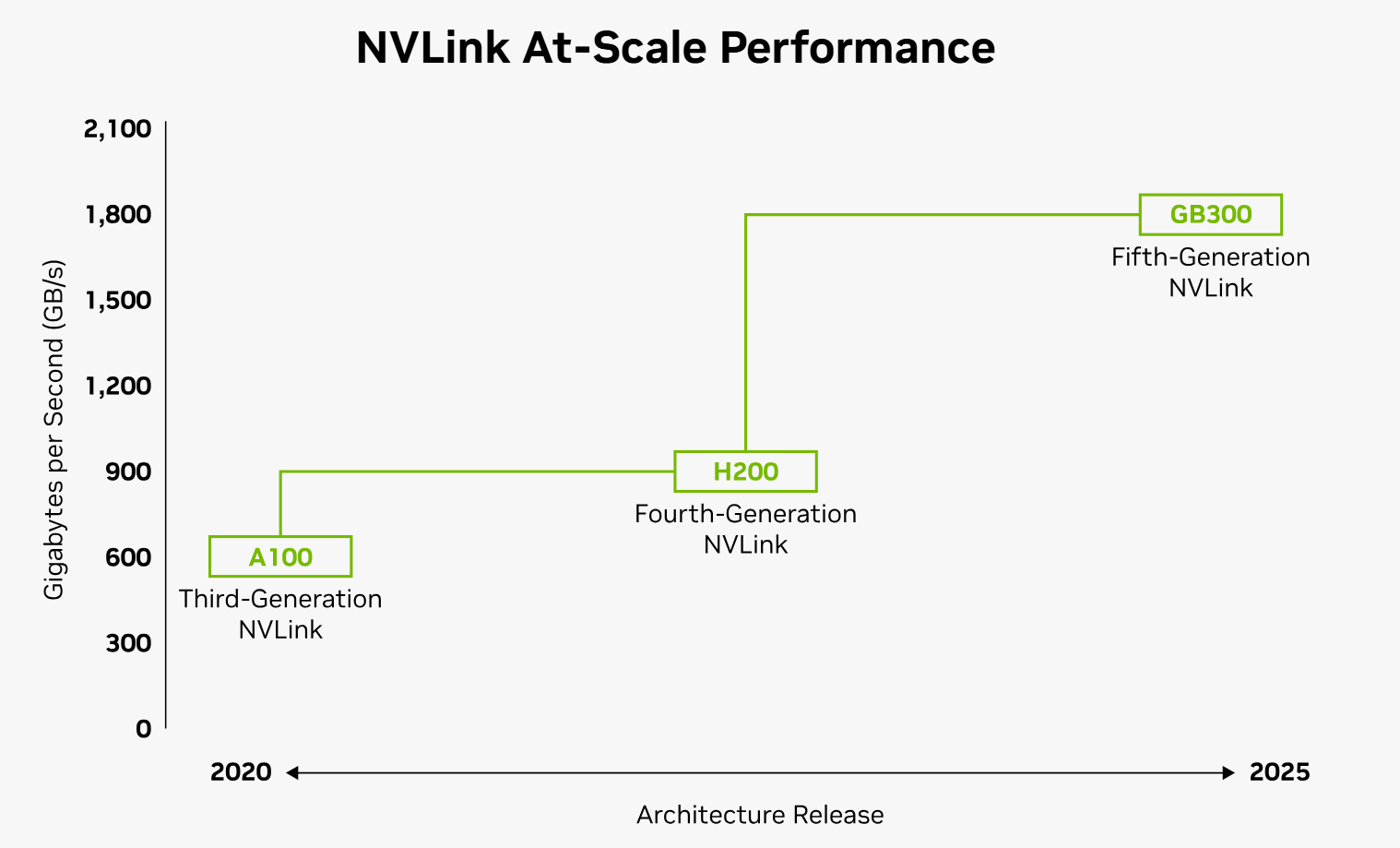

Alongside the recently launched Blackwell Ultra GPU, NVLink Fusion is a key component in Nvidia’s vision of “AI factories.” The fifth generation of NVLink is integrated into two compute-dense rack models: the GB200 NVL72 and the GB300 NVL72. Together, NVLink Fusion and Nvidia’s specialized racks can deliver a total data bandwidth of 1.8 TB/s per GPU, 14 times faster than traditional PCIe Gen5 connections.
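The "14 times" figure can be sanity-checked with simple arithmetic. The sketch below assumes a PCIe Gen5 x16 link at roughly 128 GB/s of bidirectional bandwidth; that number is not stated in the article and is used here only as a commonly quoted approximation. The names are illustrative.

```python
# NVLink figure from the article: 1.8 TB/s per GPU, expressed in GB/s.
NVLINK5_PER_GPU_GB_S = 1800

# Assumption (not from the article): PCIe Gen5 x16, about 128 GB/s bidirectional.
PCIE_GEN5_X16_GB_S = 128


def bandwidth_ratio(fast_gb_s: float, slow_gb_s: float) -> float:
    """Return how many times larger the first bandwidth is than the second."""
    return fast_gb_s / slow_gb_s


ratio = bandwidth_ratio(NVLINK5_PER_GPU_GB_S, PCIE_GEN5_X16_GB_S)
print(f"NVLink 5 vs PCIe Gen5 x16: about {ratio:.1f}x")
```

Under that assumption the ratio comes out at roughly 14, in line with the claim above.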

| | Third Generation | Fourth Generation | Fifth Generation |
|---|---|---|---|
| NVLink bandwidth per GPU | 600 GB/s | 900 GB/s | 1,800 GB/s |
| Max number of links per GPU | 12 | 18 | 18 |
| Supported Nvidia architectures | Ampere | Hopper | Blackwell |
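Dividing each generation's per-GPU bandwidth by its maximum link count gives a rough per-link figure. The following is a minimal sketch using only the numbers in the table; the dictionary and function names are illustrative, and the result is a simple average rather than an official per-link specification.

```python
# (bandwidth per GPU in GB/s, max links per GPU), copied from the table.
NVLINK_GENERATIONS = {
    "Third (Ampere)": (600, 12),
    "Fourth (Hopper)": (900, 18),
    "Fifth (Blackwell)": (1800, 18),
}


def per_link_bandwidth(total_gb_s: float, links: int) -> float:
    """Average bandwidth per link, assuming the total is split evenly."""
    return total_gb_s / links


for name, (total, links) in NVLINK_GENERATIONS.items():
    print(f"{name}: {per_link_bandwidth(total, links):.0f} GB/s per link")
```

By this estimate, the third and fourth generations average the same bandwidth per link, while the fifth doubles it without adding links.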

Nvidia CEO Jensen Huang described NVLink Fusion as a powerful way to bring new technology partners into the company’s AI platform and broader hardware ecosystem. For the first time in decades, Huang said at Computex, data centers are being reimagined and re-architected to embed AI into almost every computing platform.

Nvidia’s partners for NVLink Fusion adoption include MediaTek, Marvell, Alchip Technologies, Astera Labs, Synopsys, and Cadence. Additionally, Fujitsu and Qualcomm Technologies are using the solution to integrate their CPUs with Nvidia GPUs, enabling the development of high-performance computing systems.

NVLink Fusion can be scaled up in massive cloud provider setups, Nvidia said, placing “millions” of GPUs at the service of any ASIC solution. As highlighted by Synopsys CEO Sassine Ghazi, data centers are now becoming simply AI platforms that need to move massive datasets faster and in the most efficient way.

NVLink Switch chips can deliver 130 TB/s of bandwidth in a single 72-accelerator NVLink domain (NVL72), Nvidia explains, while the NVLink-C2C chip-to-chip interconnect can be used to build new integrated products.
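The 130 TB/s figure is consistent with the per-GPU bandwidth quoted earlier in the article. As a rough check, assuming the domain total is simply the per-GPU bandwidth multiplied by the number of accelerators (an assumption, not a statement from Nvidia), a short sketch with illustrative names:

```python
# Figures from the article.
GPUS_PER_NVL72_DOMAIN = 72
NVLINK5_PER_GPU_GB_S = 1800  # 1.8 TB/s per GPU


def domain_bandwidth_tb_s(gpus: int, per_gpu_gb_s: float) -> float:
    """Aggregate bandwidth in TB/s, assuming it scales linearly with GPU count."""
    return gpus * per_gpu_gb_s / 1000


total = domain_bandwidth_tb_s(GPUS_PER_NVL72_DOMAIN, NVLINK5_PER_GPU_GB_S)
print(f"Aggregate NVL72 bandwidth: {total:.1f} TB/s")
```

The result, 129.6 TB/s, rounds to the 130 TB/s that Nvidia quotes.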

NVLink Fusion should offer ample customization options to ASIC and silicon manufacturers. At the same time, Nvidia is offering a highly integrated product stack with its Mission Control orchestration software component. Thanks to this unified control center, enterprise customers can automate the complex management tasks required to run a modernized AI infrastructure.