Cutting corners: In a surprising turn for the fast-evolving world of artificial intelligence, a new study has found that AI-powered coding assistants may actually hinder productivity among seasoned software developers rather than accelerate it, even though speed is the main reason developers use these tools.

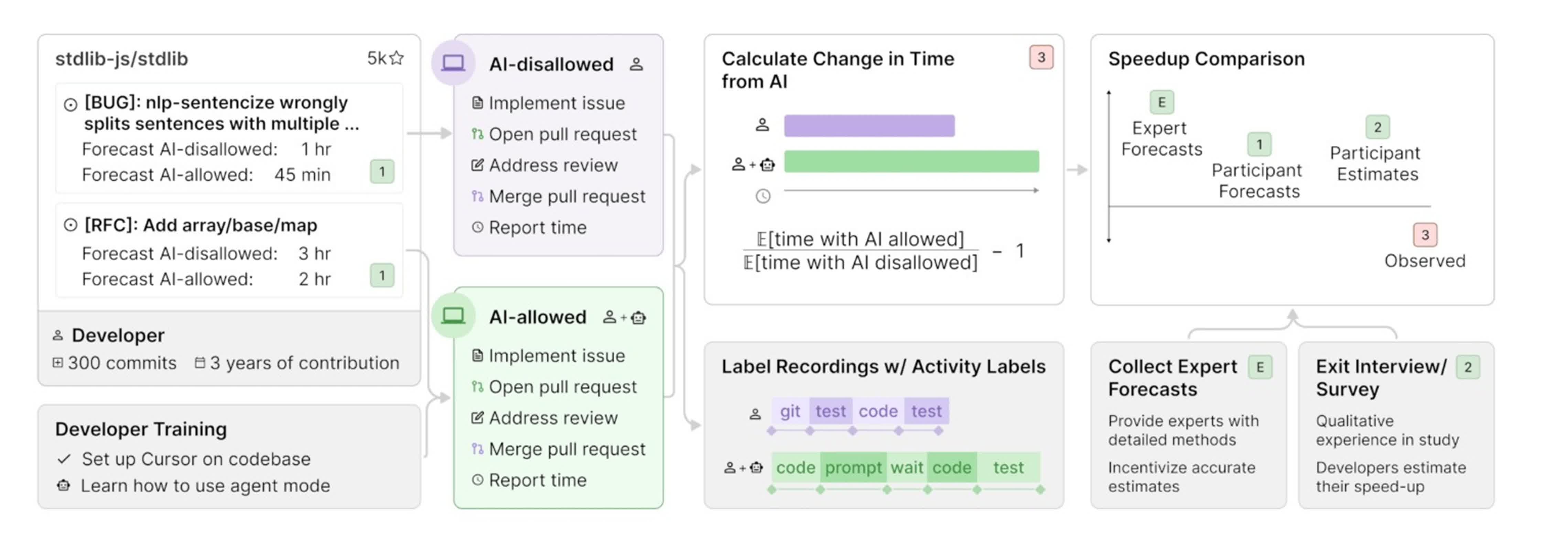

The study, conducted by the non-profit Model Evaluation & Threat Research (METR), set out to measure the real-world impact of advanced AI tools on software development. Over several months in early 2025, METR observed 16 experienced open-source developers as they tackled 246 real programming tasks – ranging from bug fixes to new feature implementations – on large code repositories they knew intimately. Each task was randomly assigned to either allow or prohibit the use of AI coding tools, with most participants choosing Cursor Pro paired with Claude 3.5 or 3.7 Sonnet when allowed to use AI.

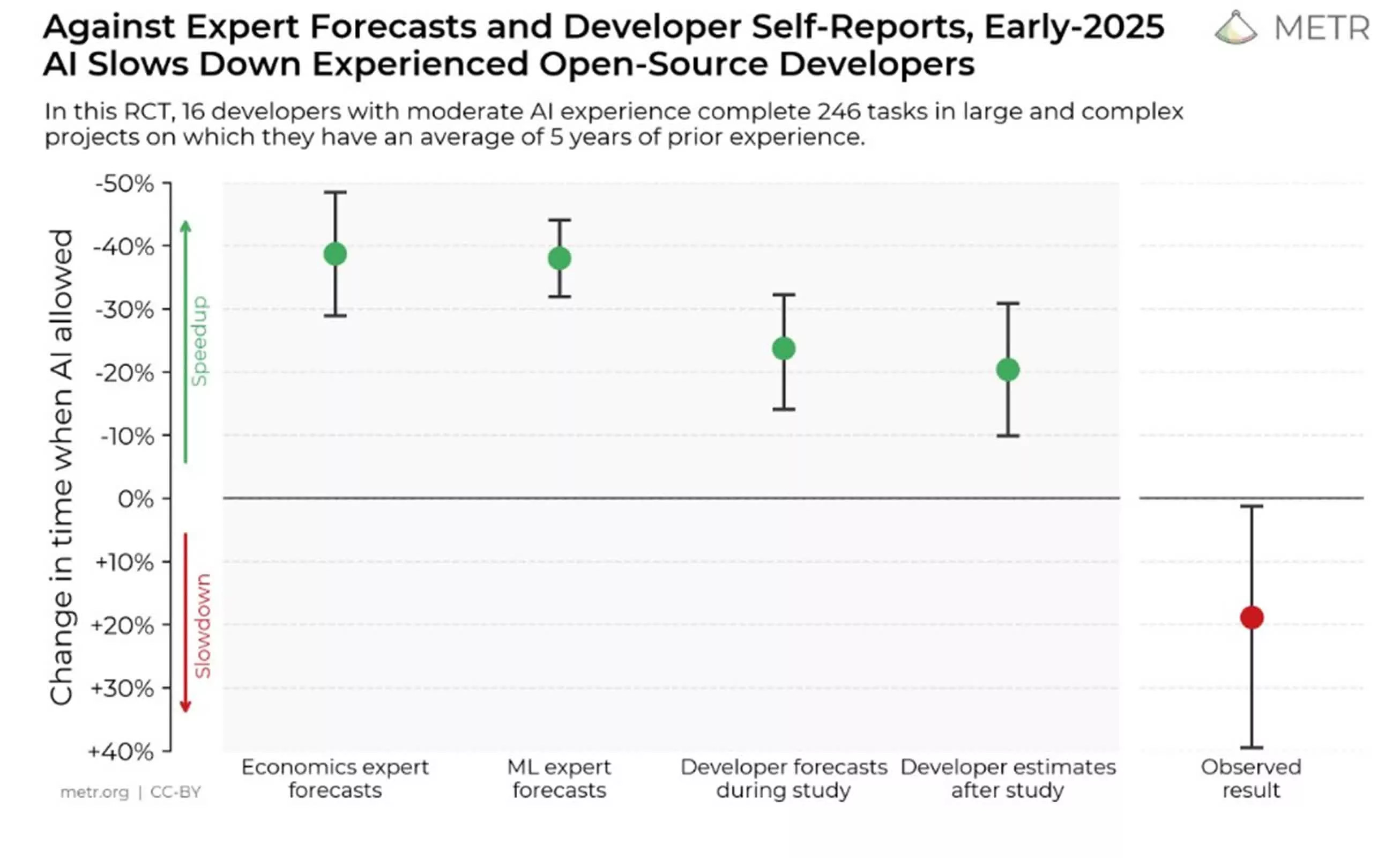

Before starting, developers confidently predicted that AI would make them 24 percent faster. Even after the study concluded, they still believed their productivity had improved by 20 percent when using AI. The reality, however, was starkly different. The data showed that developers actually took 19 percent longer to finish tasks when using AI tools, a result that ran counter not only to their perceptions but also to the forecasts of experts in economics and machine learning.
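To make the gap between expectation and outcome concrete, here is a minimal sketch of the arithmetic. It assumes that "X percent faster" means a task takes X percent less time, and it uses a hypothetical 60-minute task; neither the baseline nor the function names come from the study.

```python
# Illustrative arithmetic only. The 60-minute baseline is a hypothetical
# example, not a figure from the METR study.
BASELINE_MINUTES = 60.0


def time_if_faster(baseline: float, pct_faster: float) -> float:
    """Task time if it takes pct_faster percent less time (assumed reading)."""
    return baseline * (1 - pct_faster / 100)


def time_if_longer(baseline: float, pct_longer: float) -> float:
    """Task time if it takes pct_longer percent more time."""
    return baseline * (1 + pct_longer / 100)


predicted = time_if_faster(BASELINE_MINUTES, 24)  # forecast before the study
perceived = time_if_faster(BASELINE_MINUTES, 20)  # belief after the study
observed = time_if_longer(BASELINE_MINUTES, 19)   # measured result

print(f"Predicted with AI: {predicted:.1f} min")
print(f"Perceived with AI: {perceived:.1f} min")
print(f"Observed with AI:  {observed:.1f} min")
print(f"Gap between forecast and outcome: {observed - predicted:.1f} min")
```

Under these assumptions, a task expected to drop from 60 minutes to roughly 46 would instead take about 71, a gap of nearly 26 minutes on a single hour-long task.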

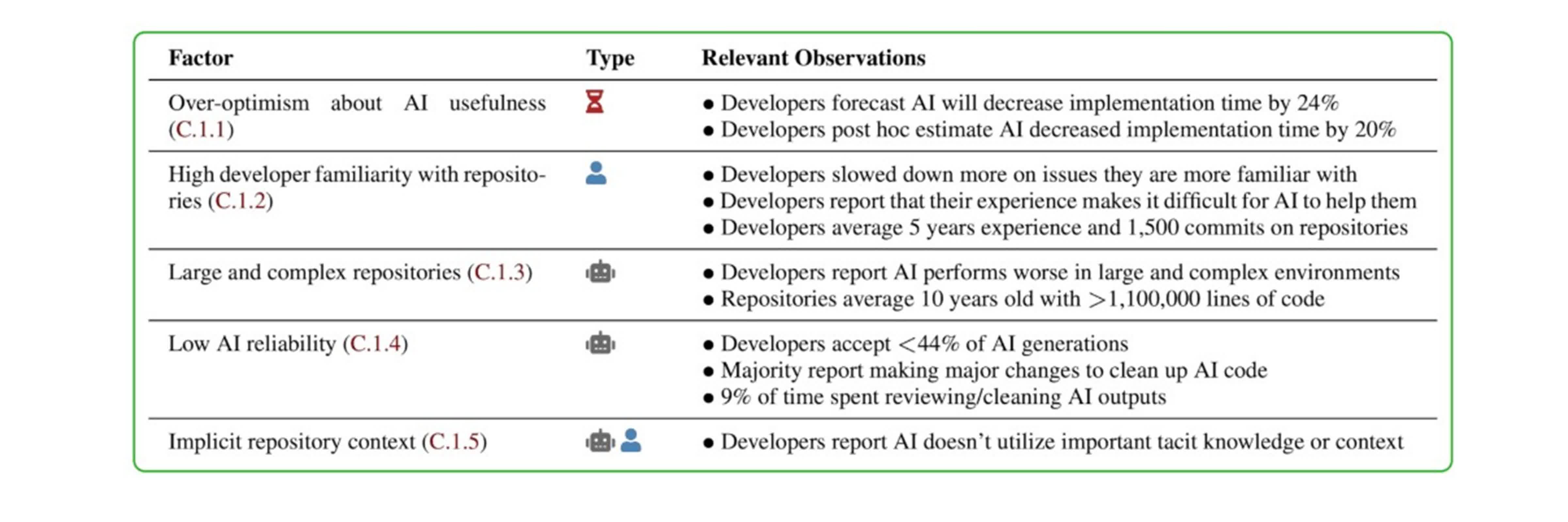

The researchers dug into possible causes for this unexpected slowdown and identified several contributing factors. First, developers’ optimism about the usefulness of AI tools often outpaced the technology’s actual capabilities. Many participants were highly familiar with their codebases, leaving little room for AI to offer meaningful shortcuts. The complexity and size of the projects – often exceeding a million lines of code – also posed a challenge for AI, which tends to perform better on smaller, more contained problems. Additionally, the reliability of AI suggestions was inconsistent; developers accepted less than 44 percent of the code it generated, spending significant time reviewing and correcting these outputs. Finally, AI tools struggled to grasp the implicit context within large repositories, leading to misunderstandings and irrelevant suggestions.

The study’s methodology was rigorous. Each developer estimated how long a task would take with and without AI, then worked through the issues while recording their screens and self-reporting the time spent. Participants were compensated $150 per hour to ensure professional commitment to the process. The results remained consistent across various outcome measures and analyses, with no evidence that experimental artifacts or bias influenced the findings.

Researchers caution that these results should not be overgeneralized. The study focused on highly skilled developers working on familiar, complex codebases. AI tools may still offer greater benefits to less experienced programmers or those working on unfamiliar or smaller projects. The authors also acknowledge that AI technology is evolving rapidly, and future iterations could yield different results.

Despite the slowdown, many participants and researchers continue to use AI coding tools. They note that, while AI may not always speed up the process, it can make certain aspects of development less mentally taxing, turning coding into a task that is more iterative and less daunting.